Getting Started with OpenRouter – OpenRouter is a middleware platform that provides access to a range of AI models, including OpenAI’s GPT-4.5, o1, and GPT-4o, xAI’s Grok, Meta’s Llama 3.1, Anthropic’s Claude, and more.

This platform allows developers to use multiple AI models with a single API key.

OpenRouter’s API is also compatible with OpenAI’s client API, making it easy for developers who are already familiar with OpenAI’s API to integrate OpenRouter into their applications.

OpenRouter offers several free artificial intelligence models, including Nous: Hermes 3 405B Instruct, Meta: Llama 3.1 8B Instruct, and Mistral: Mistral 7B Instruct.

Its features and offerings are similar to those of Groq and Kluster.ai.

TL;DR

- OpenRouter is a middleware platform that provides access to a range of AI models, including the latest OpenAI o1 model, GPT-4o, Llama 3.1, Anthropic's Claude, and more.

- OpenRouter's API is compatible with OpenAI's client API, making it easy for developers familiar with OpenAI's API to integrate OpenRouter into their applications.

- OpenRouter offers several free artificial intelligence models, including Nous: Hermes 3 405B Instruct, Meta: Llama 3.1 8B Instruct, and Mistral: Mistral 7B Instruct.

- To get started with OpenRouter, users need to create an account, obtain an API key, and then build an AI application using the platform.

- The article provides a step-by-step guide on how to use OpenRouter, including creating an account, obtaining an API key, and developing an AI application.

- OpenRouter has implemented an API compatible with OpenAI, which allows for easy integration with LangChain's ChatOpenAI class in a Large Language Model (LLM) application.

- The article also covers how to use OpenRouter with LiteLLM, a Python library that allows users to interact with various LLMs, including OpenRouter.

- The article provides a list of free AI models available on OpenRouter, including their model names and functions.

- The article also covers how to use OpenRouter's Chat Playground and LLM Desktop Apps instead of coding.

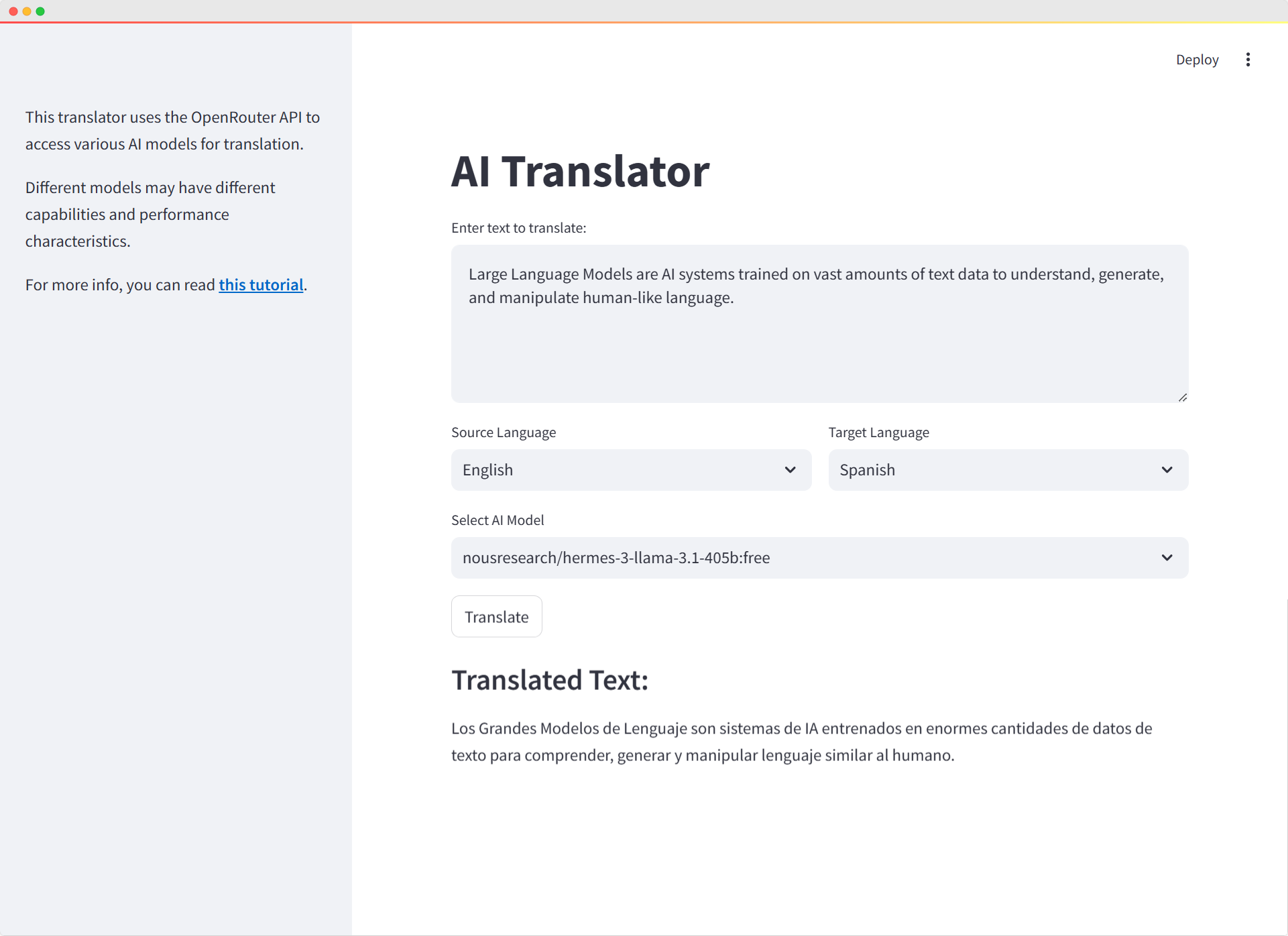

- The article demonstrates how to create a simple AI translator web app using OpenRouter API, Python, and Streamlit.

- The article provides a complete Python script for building the AI translator app, including the necessary libraries and functions.

- The article concludes by highlighting the benefits of using OpenRouter and Streamlit to build AI-powered applications.

This article will provide a step-by-step guide on how to use OpenRouter, including creating an account, obtaining an API key, and developing an AI application.

Get Started with OpenRouter

To get started with OpenRouter, you’ll need to set up an account, obtain an API key, and then build an AI application using the platform.

1. Create an OpenRouter Account

If you don’t have an account, create one at https://openrouter.ai. Otherwise, skip this step.

2. Get an API Key

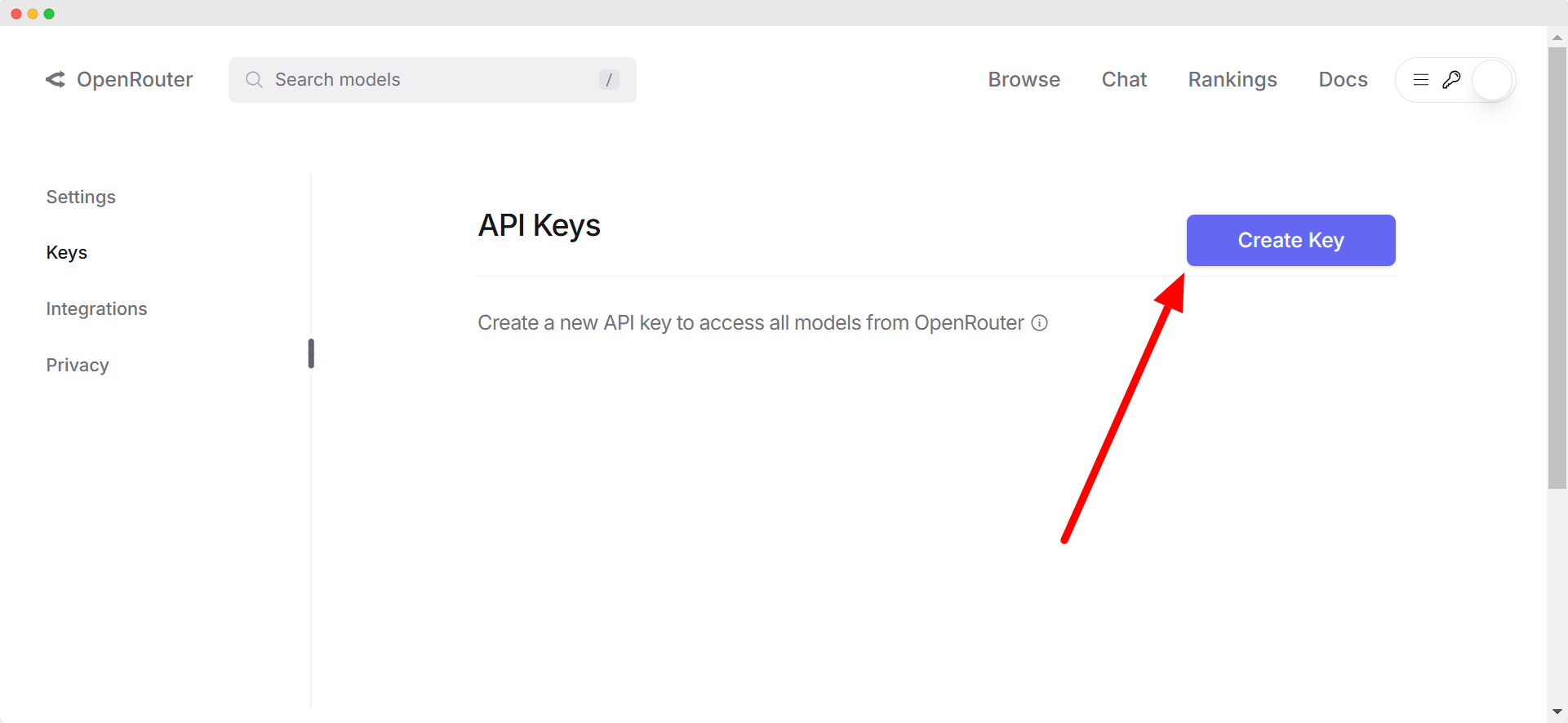

Visit https://openrouter.ai/keys and click “Create Key”.

Name your key, then set up a credit limit if necessary.

If you set a credit limit, then the API key will stop working when the total credits (in USD) used by the API key reach or exceed this amount. Enter no value for no limit.

If you plan to use only the free AI models, leave the field blank as well.
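The credit-limit rule described above can be pictured with a small sketch. The function name `key_is_exhausted` and its parameters are hypothetical; OpenRouter enforces the real limit on its own servers, so this only models the behavior for illustration.

```python
from typing import Optional


def key_is_exhausted(used_usd: float, limit_usd: Optional[float]) -> bool:
    """Model the rule: a key stops working once usage reaches or exceeds
    its limit; a key with no limit (None) is never exhausted."""
    if limit_usd is None:
        return False
    return used_usd >= limit_usd


print(key_is_exhausted(4.99, 5.0))    # False
print(key_is_exhausted(5.0, 5.0))     # True
print(key_is_exhausted(120.0, None))  # False
```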

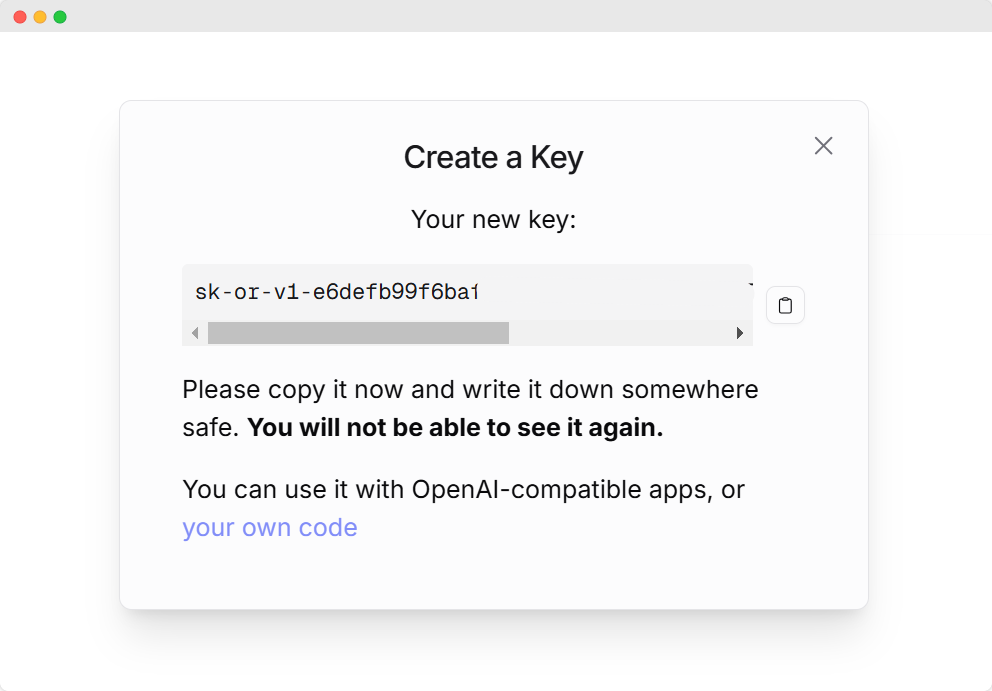

Copy your OpenRouter API key and save it in a safe place. Once you close the modal, you will not be able to view the key again.

3. Add Credit Balance

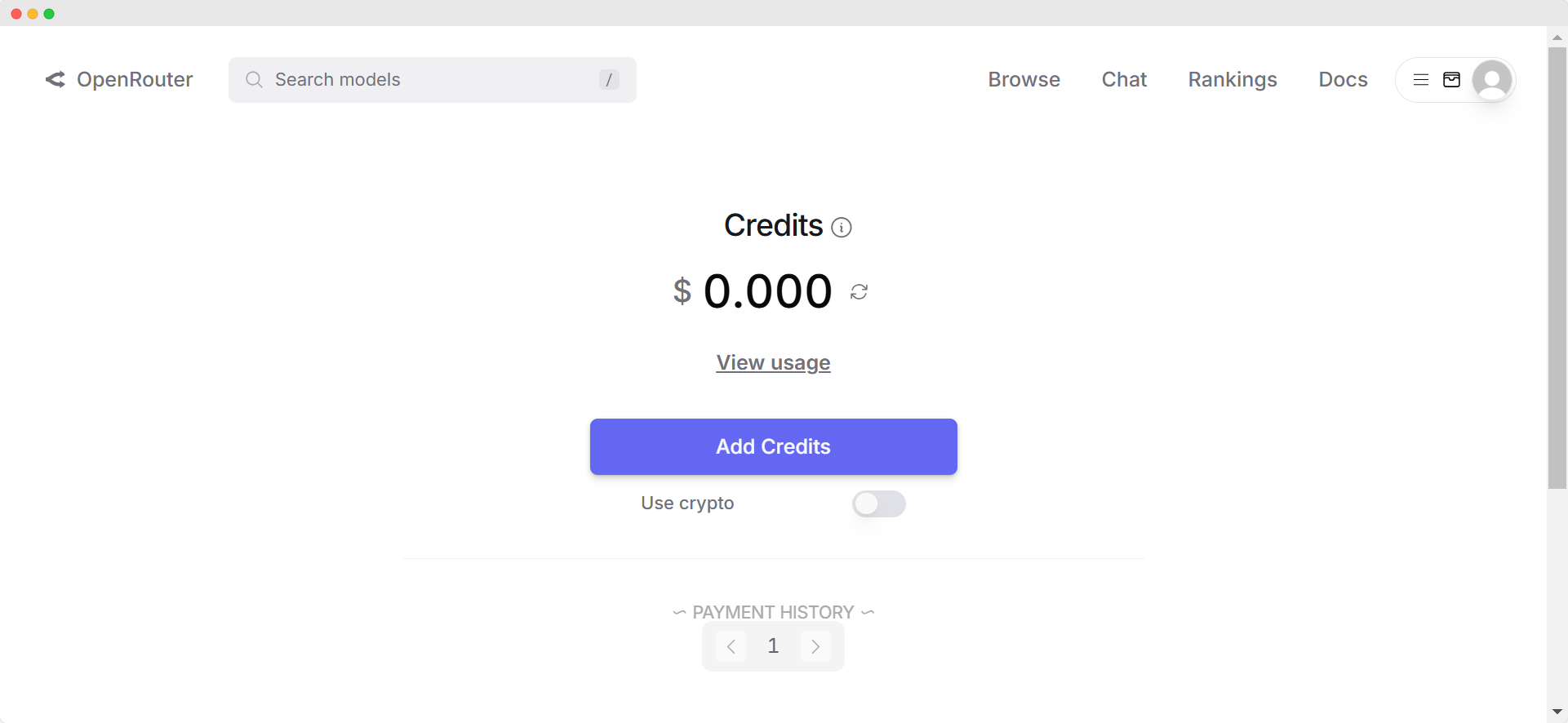

To add credits to your account, visit https://openrouter.ai/account#credits, click “Add Credits”, and then enter your billing information following the on-screen instructions.

If you only plan to use free AI models, you can skip this section.

However, for certain free AI models that charge no input or output token fees, OpenRouter may still charge you for infrastructure usage costs.

This is because, even though the AI model itself is free, the underlying infrastructure still incurs costs.

How much should you top up?

In my experience, you can start using specific AI models even if they require a fee by adding a small amount to your balance. A $5 top-up is usually sufficient, especially if you plan to experiment with different AI models on OpenRouter.

To add funds to your balance, you can use a credit card, online debit card, or cryptocurrency (with a 5% fee applied to crypto transactions).
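As a quick worked example of the 5% crypto fee, the sketch below computes the fee on a top-up. Whether the fee is added on top of the top-up or deducted from it is not stated here, so the function (a hypothetical helper, not part of any OpenRouter tooling) returns only the fee amount.

```python
from decimal import Decimal


def crypto_fee(top_up_usd: str, fee_rate: str = "0.05") -> Decimal:
    """Return the fee charged on a crypto top-up, rounded to cents."""
    fee = Decimal(top_up_usd) * Decimal(fee_rate)
    return fee.quantize(Decimal("0.01"))


print(crypto_fee("5"))   # 0.25
print(crypto_fee("20"))  # 1.00
```

So a $5 top-up paid with cryptocurrency would carry a $0.25 fee.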

A Simple Quick Start Guide for OpenRouter

You can start using OpenRouter by accessing its API using Python as follows:

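import json
import os

import requests

# Read the API key from the environment instead of hardcoding it.
OPENROUTER_API_KEY = os.getenv("OPENROUTER_API_KEY")
YOUR_SITE_URL = "https://example.com"  # Placeholder: replace with your site URL.
YOUR_APP_NAME = "My App"  # Placeholder: replace with your app name.

response = requests.post(
    url="https://openrouter.ai/api/v1/chat/completions",
    headers={
        "Authorization": f"Bearer {OPENROUTER_API_KEY}",
        "HTTP-Referer": YOUR_SITE_URL,  # Optional, for including your app on openrouter.ai rankings.
        "X-Title": YOUR_APP_NAME,  # Optional. Shows in rankings on openrouter.ai.
    },
    data=json.dumps({
        "model": "openai/o1-preview",  # Optional
        "messages": [
            {"role": "user", "content": "What is the meaning of life?"}
        ],
    }),
)

# Print the model's reply.
print(response.json()["choices"][0]["message"]["content"])

Using OpenRouter API with OpenAI’s Client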

You can use OpenRouter in conjunction with the client API provided by OpenAI.

from os import getenv

from openai import OpenAI

YOUR_SITE_URL = "https://example.com"  # Placeholder: replace with your site URL.
YOUR_APP_NAME = "My App"  # Placeholder: replace with your app name.

# Gets the API key from the environment variable OPENROUTER_API_KEY
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key=getenv("OPENROUTER_API_KEY"),
)

completion = client.chat.completions.create(
    extra_headers={
        "HTTP-Referer": YOUR_SITE_URL,  # Optional, for including your app on openrouter.ai rankings.
        "X-Title": YOUR_APP_NAME,  # Optional. Shows in rankings on openrouter.ai.
    },
    model="openai/o1-preview",
    messages=[
        {
            "role": "user",
            "content": "Say this is a test",
        },
    ],
)

print(completion.choices[0].message.content)

Using OpenRouter API with LiteLLM

LiteLLM is compatible with all text, chat, and vision models provided by OpenRouter. Additionally, you can use OpenRouter with LiteLLM in the following ways:

import os

from litellm import completion

os.environ["OPENROUTER_API_KEY"] = ""
os.environ["OR_SITE_URL"] = ""  # optional
os.environ["OR_APP_NAME"] = ""  # optional

messages = [{"role": "user", "content": "Say this is a test"}]

response = completion(
    model="openrouter/openai/o1-preview",
    messages=messages,
)

To use LiteLLM with an OpenRouter model, send the request with the model name specified as openrouter/<your-openrouter-model>, e.g. openrouter/nousresearch/hermes-3-llama-3.1-405b:free.

| Model Name | Function Call |
|---|---|
| openrouter/openai/gpt-3.5-turbo | completion('openrouter/openai/gpt-3.5-turbo', messages) |
| openrouter/openai/gpt-3.5-turbo-16k | completion('openrouter/openai/gpt-3.5-turbo-16k', messages) |
| openrouter/openai/gpt-4 | completion('openrouter/openai/gpt-4', messages) |
| openrouter/openai/gpt-4-32k | completion('openrouter/openai/gpt-4-32k', messages) |
| openrouter/anthropic/claude-2 | completion('openrouter/anthropic/claude-2', messages) |
| openrouter/anthropic/claude-instant-v1 | completion('openrouter/anthropic/claude-instant-v1', messages) |
| openrouter/google/palm-2-chat-bison | completion('openrouter/google/palm-2-chat-bison', messages) |
| openrouter/google/palm-2-codechat-bison | completion('openrouter/google/palm-2-codechat-bison', messages) |
| openrouter/meta-llama/llama-2-13b-chat | completion('openrouter/meta-llama/llama-2-13b-chat', messages) |
| openrouter/meta-llama/llama-2-70b-chat | completion('openrouter/meta-llama/llama-2-70b-chat', messages) |
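The naming rule behind the table can be sketched as a small helper. The function name `to_litellm_model` is hypothetical (it is not part of LiteLLM); it only builds the string you would pass as `model`, and it assumes the input is an OpenRouter model ID that has not already been prefixed.

```python
def to_litellm_model(openrouter_model: str) -> str:
    """Prefix an OpenRouter model ID with 'openrouter/' for use with LiteLLM."""
    return f"openrouter/{openrouter_model}"


print(to_litellm_model("nousresearch/hermes-3-llama-3.1-405b:free"))
# openrouter/nousresearch/hermes-3-llama-3.1-405b:free
```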

For full documentation on how to use the OpenRouter API with LiteLLM, refer to the official LiteLLM documentation.

Using OpenRouter with LangChain in Python

OpenRouter has implemented an API compatible with OpenAI, which allows for easy integration with LangChain’s ChatOpenAI class in a Large Language Model (LLM) application.

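To begin, install the required dependencies using pip (the langchain_community import used in the class comes from the langchain-community package):

pip install langchain
pip install langchain-community
pip install openai

We will create our own ChatOpenRouter class by inheriting from the ChatOpenAI class: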

import os
from typing import Optional

from langchain_community.chat_models import ChatOpenAI


class ChatOpenRouter(ChatOpenAI):
    openai_api_base: str
    openai_api_key: str
    model_name: str

    def __init__(self,
                 model_name: str,
                 openai_api_key: Optional[str] = None,
                 openai_api_base: str = "https://openrouter.ai/api/v1",
                 **kwargs):
        # Fall back to the OPENROUTER_API_KEY environment variable.
        openai_api_key = openai_api_key or os.getenv('OPENROUTER_API_KEY')
        super().__init__(openai_api_base=openai_api_base,
                         openai_api_key=openai_api_key,
                         model_name=model_name, **kwargs)

We can now use the free nousresearch/hermes-3-llama-3.1-405b:free AI model as follows:

from langchain_core.prompts import ChatPromptTemplate

llm = ChatOpenRouter(
    model_name="nousresearch/hermes-3-llama-3.1-405b:free"
)

prompt = ChatPromptTemplate.from_template("explain {topic} in one sentence")
openrouter_chain = prompt | llm

# invoke() returns a message object; .content holds the generated text.
print(openrouter_chain.invoke({"topic": "Large Language Models"}).content)

And the output would be similar to:

Large Language Models are AI systems trained on vast amounts of text data to understand, generate, and manipulate human-like language.

Finding Free AI Models to Use at OpenRouter

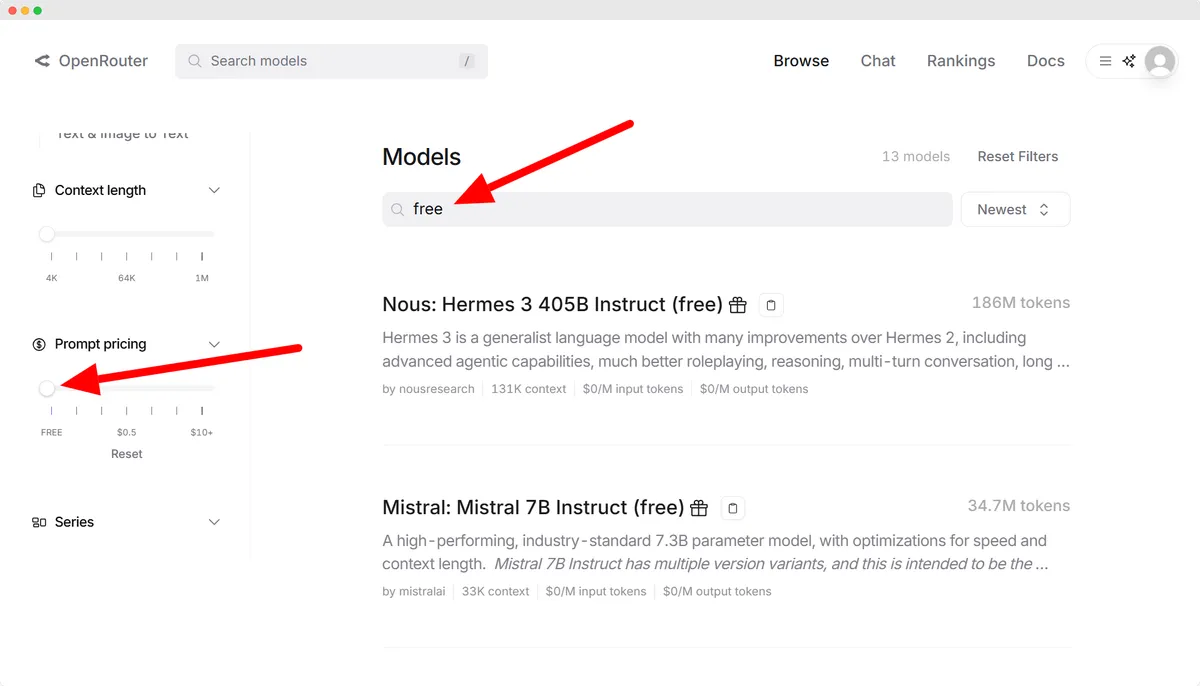

As previously mentioned, OpenRouter offers access to a wide range of AI models with a single API key, including free options such as DeepSeek and Gemini models.

For users unfamiliar with OpenRouter, finding free AI models might seem challenging. However, the process is straightforward.

To locate free models, visit OpenRouter’s Models page and search for models using the “free” keyword, or navigate to the Prompt pricing section and slide it to the left until you reach the option marked “FREE”, as shown in the screenshot.
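If you already have a list of model IDs, the same filtering can be done in code. This is a minimal sketch that assumes free variants carry a `:free` suffix, as most of the IDs collected in this article do; the helper name `free_models` is hypothetical and the sample IDs are only for illustration.

```python
def free_models(model_ids):
    """Return only the model IDs that end with the ':free' suffix."""
    return [m for m in model_ids if m.endswith(":free")]


sample = [
    "deepseek/deepseek-r1:free",
    "openai/o1-preview",
    "meta-llama/llama-3.1-8b-instruct:free",
]
print(free_models(sample))
```

Note that a suffix check like this would miss any free model whose ID does not end in `:free`.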

To make it easier for you, here are the free AI models I’ve collected:

google/gemini-2.5-pro-exp-03-25:free

google/gemma-3-1b-it:free

google/gemma-3-4b-it:free

google/gemma-3-12b-it:free

google/gemma-3-27b-it:free

google/gemini-2.0-flash-lite-preview-02-05:free

google/gemini-2.0-pro-exp-02-05:free

google/gemini-2.0-flash-thinking-exp:free

google/gemini-2.0-flash-thinking-exp-1219:free

google/gemini-2.0-flash-exp:free

google/learnlm-1.5-pro-experimental:free

google/gemini-flash-1.5-8b-exp

google/gemma-2-9b-it:free

deepseek/deepseek-chat-v3-0324:free

deepseek/deepseek-r1-zero:free

deepseek/deepseek-r1-distill-llama-70b:free

deepseek/deepseek-r1:free

deepseek/deepseek-chat:free

meta-llama/llama-3.3-70b-instruct:free

meta-llama/llama-3.2-3b-instruct:free

meta-llama/llama-3.2-1b-instruct:free

meta-llama/llama-3.2-11b-vision-instruct:free

meta-llama/llama-3.1-8b-instruct:free

meta-llama/llama-3-8b-instruct:free

qwen/qwen2.5-vl-3b-instruct:free

qwen/qwen2.5-vl-32b-instruct:free

qwen/qwq-32b:free

qwen/qwen2.5-vl-72b-instruct:free

deepseek/deepseek-r1-distill-qwen-32b:free

deepseek/deepseek-r1-distill-qwen-14b:free

qwen/qwq-32b-preview:free

qwen/qwen-2.5-coder-32b-instruct:free

qwen/qwen-2.5-72b-instruct:free

qwen/qwen-2.5-vl-7b-instruct:free

qwen/qwen-2-7b-instruct:free

allenai/molmo-7b-d:free

bytedance-research/ui-tars-72b:free

featherless/qwerky-72b:free

mistralai/mistral-small-3.1-24b-instruct:free

open-r1/olympiccoder-7b:free

open-r1/olympiccoder-32b:free

rekaai/reka-flash-3:free

moonshotai/moonlight-16b-a3b-instruct:free

nousresearch/deephermes-3-llama-3-8b-preview:free

cognitivecomputations/dolphin3.0-r1-mistral-24b:free

cognitivecomputations/dolphin3.0-mistral-24b:free

mistralai/mistral-small-24b-instruct-2501:free

sophosympatheia/rogue-rose-103b-v0.2:free

nvidia/llama-3.1-nemotron-70b-instruct:free

mistralai/mistral-nemo:free

mistralai/mistral-7b-instruct:free

microsoft/phi-3-mini-128k-instruct:free

microsoft/phi-3-medium-128k-instruct:free

openchat/openchat-7b:free

undi95/toppy-m-7b:free

huggingfaceh4/zephyr-7b-beta:free

gryphe/mythomax-l2-13b:free

Using OpenRouter’s Chat Playground and LLM Desktop Apps Instead of Coding

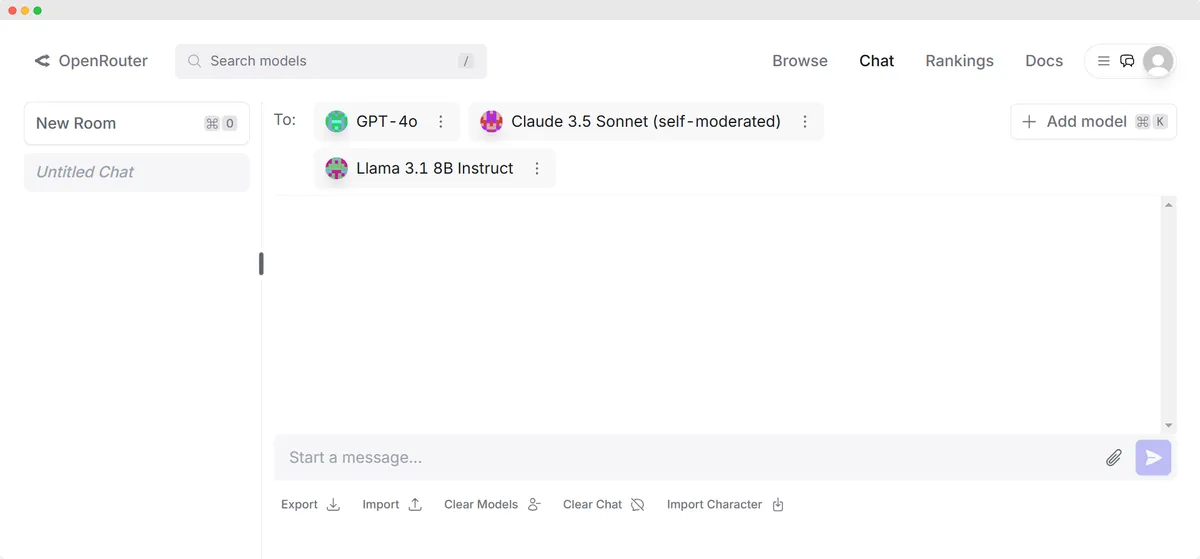

If you would rather not write code to get started with OpenRouter, you can always use OpenRouter’s Chat Playground.

Or, if you prefer a desktop app with a better UI, here are my favorites:

Create a Simple AI Translator Web App with OpenRouter API, Python and Streamlit

Now it’s time for the most exciting part. Let’s create a functional application using the OpenRouter API, which gives programmatic access to the available AI models, both free and paid.

We will use Streamlit to create a web app with a user-friendly interface that can be run locally in a browser.

What We’ll Need

Before we start, here are the key libraries and tools I used for this project:

- Streamlit: A Python library to quickly create web apps.

- requests: For making API requests to OpenRouter.

- dotenv: To manage environment variables, like the API key.

You can install these using the following command:

pip install streamlit requests python-dotenv

Setting Up the Environment

We use python-dotenv (installed with the command above) to manage environment variables. This keeps the OpenRouter API key out of the script instead of hardcoding it.

I stored my API key in a .env file like this:

OPENROUTER_API_KEY=your_openrouter_api_key

I then loaded this environment variable in the Python script:

import os

from dotenv import load_dotenv

# Load environment variables
load_dotenv()

# Get API key from environment variable
OPENROUTER_API_KEY = os.getenv('OPENROUTER_API_KEY')

This ensures that my API key is safely stored and easily accessible whenever I need it.
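One thing `os.getenv` does not do is complain when the variable is missing: it simply returns `None`, and the problem would likely only surface later as a failed API request. A minimal sketch of a fail-fast check follows. The helper name `require_env` is hypothetical, and the `env` parameter exists only so the function can be tried without touching the real environment.

```python
import os


def require_env(name, env=None):
    """Return the value of an environment variable, or raise a clear error."""
    env = os.environ if env is None else env
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return value


# Example with a stand-in environment (a plain dict) instead of os.environ:
print(require_env("OPENROUTER_API_KEY", {"OPENROUTER_API_KEY": "sk-test"}))
```

In the script, this would be called after `load_dotenv()` so that values from the .env file are already loaded.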

Interacting with OpenRouter API

To interact with OpenRouter, I created a function that handles the API request. This function sends the text to be translated, along with the source and target languages, to OpenRouter, which then returns the translated text.

Here’s how I wrote the function:

import requests
import json

# REFERRER and APP_NAME are constants defined in the complete script.
def translate_text(text, source_lang, target_lang, model):
    # Send the text, languages, and model to the chat completions endpoint.
    response = requests.post(
        url="https://openrouter.ai/api/v1/chat/completions",
        headers={
            "Authorization": f"Bearer {OPENROUTER_API_KEY}",
            "HTTP-Referer": REFERRER,
            "X-Title": APP_NAME,
        },
        data=json.dumps({
            "model": model,
            "messages": [
                {
                    "role": "system",
                    "content": f"You are a translator. Translate the given text from {source_lang} to {target_lang}. Only provide the translation, no additional explanations."
                },
                {
                    "role": "user",
                    "content": text
                }
            ]
        })
    )

    # Return the translation on success, or the status code and body for debugging.
    if response.status_code == 200:
        return response.json()['choices'][0]['message']['content']
    else:
        return f"Error: {response.status_code}, {response.text}"

This function sends a POST request to the OpenRouter API with the following information:

- The text to be translated.

- The source and target languages.

- The AI model chosen by the user.

The function checks for a successful response and returns the translated text. If there’s an error, it returns the error message and status code for debugging.
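To make the request and response handling easier to test without calling the API, the same logic can be split into two pure functions. This is a sketch, not the script used in this article: `build_payload` and `extract_translation` are hypothetical names, and the sample response only mimics the `choices[0].message.content` shape that the function above reads.

```python
import json


def build_payload(text, source_lang, target_lang, model):
    """Build the JSON body for a chat completion that translates `text`."""
    system_prompt = (
        f"You are a translator. Translate the given text from {source_lang} "
        f"to {target_lang}. Only provide the translation, no additional explanations."
    )
    return json.dumps({
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": text},
        ],
    })


def extract_translation(status_code, body_text):
    """Return the translated text, or an error string if the call failed."""
    if status_code != 200:
        return f"Error: {status_code}, {body_text}"
    try:
        return json.loads(body_text)["choices"][0]["message"]["content"]
    except (ValueError, KeyError, IndexError, TypeError):
        # The body was not JSON or did not have the expected shape.
        return f"Error: unexpected response format: {body_text}"


# A hand-written stand-in for a successful response body:
fake_response = json.dumps(
    {"choices": [{"message": {"role": "assistant", "content": "Hola"}}]}
)
print(extract_translation(200, fake_response))   # Hola
print(extract_translation(401, "Unauthorized"))  # Error: 401, Unauthorized
```

Unlike the original function, this version also guards against a 200 response whose body does not have the expected shape.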

Building the Web Interface with Streamlit

Now, for the fun part—creating the web interface with Streamlit! I chose Streamlit because it makes it really easy to build interactive web applications without needing to deal with HTML, CSS, or JavaScript. Everything happens in Python.

Here’s how I built the interface:

1. Input Area for Text

I wanted users to be able to paste or type text into a large text area. Streamlit’s st.text_area() was perfect for this:

input_text = st.text_area("Enter text to translate:", height=150)

This allows users to input text that they want to translate.

2. Language Selection

Next, I added two dropdowns for users to select the source and target languages. Streamlit’s st.selectbox() made this super simple:

col1, col2 = st.columns(2)

with col1:
    source_lang = st.selectbox("Source Language", ["English", "Spanish", "French", "German", "Chinese", "Japanese"])

with col2:
    target_lang = st.selectbox("Target Language", ["Spanish", "English", "French", "German", "Chinese", "Japanese"])

This code splits the UI into two columns—one for the source language and the other for the target language.

3. Selecting an AI Model

The OpenRouter API provides access to various free and paid AI models. I allowed users to select which model they wanted to use for the translation:

# Free AI models

AI_MODELS = [

"google/gemini-2.5-pro-exp-03-25:free",

"google/gemma-3-1b-it:free",

"google/gemma-3-4b-it:free",

"google/gemma-3-12b-it:free",

"google/gemma-3-27b-it:free",

"google/gemini-2.0-flash-lite-preview-02-05:free",

"google/gemini-2.0-pro-exp-02-05:free",

"google/gemini-2.0-flash-thinking-exp:free",

"google/gemini-2.0-flash-thinking-exp-1219:free",

"google/gemini-2.0-flash-exp:free",

"google/learnlm-1.5-pro-experimental:free",

"google/gemini-flash-1.5-8b-exp",

"google/gemma-2-9b-it:free",

"deepseek/deepseek-chat-v3-0324:free",

"deepseek/deepseek-r1-zero:free",

"deepseek/deepseek-r1-distill-llama-70b:free",

"deepseek/deepseek-r1:free",

"deepseek/deepseek-chat:free",

"meta-llama/llama-3.3-70b-instruct:free",

"meta-llama/llama-3.2-3b-instruct:free",

"meta-llama/llama-3.2-1b-instruct:free",

"meta-llama/llama-3.2-11b-vision-instruct:free",

"meta-llama/llama-3.1-8b-instruct:free",

"meta-llama/llama-3-8b-instruct:free",

"qwen/qwen2.5-vl-3b-instruct:free",

"qwen/qwen2.5-vl-32b-instruct:free",

"qwen/qwq-32b:free",

"qwen/qwen2.5-vl-72b-instruct:free",

"deepseek/deepseek-r1-distill-qwen-32b:free",

"deepseek/deepseek-r1-distill-qwen-14b:free",

"qwen/qwq-32b-preview:free",

"qwen/qwen-2.5-coder-32b-instruct:free",

"qwen/qwen-2.5-72b-instruct:free",

"qwen/qwen-2.5-vl-7b-instruct:free",

"qwen/qwen-2-7b-instruct:free",

"allenai/molmo-7b-d:free",

"bytedance-research/ui-tars-72b:free",

"featherless/qwerky-72b:free",

"mistralai/mistral-small-3.1-24b-instruct:free",

"open-r1/olympiccoder-7b:free",

"open-r1/olympiccoder-32b:free",

"rekaai/reka-flash-3:free",

"moonshotai/moonlight-16b-a3b-instruct:free",

"nousresearch/deephermes-3-llama-3-8b-preview:free",

"cognitivecomputations/dolphin3.0-r1-mistral-24b:free",

"cognitivecomputations/dolphin3.0-mistral-24b:free",

"mistralai/mistral-small-24b-instruct-2501:free",

"sophosympatheia/rogue-rose-103b-v0.2:free",

"nvidia/llama-3.1-nemotron-70b-instruct:free",

"mistralai/mistral-nemo:free",

"mistralai/mistral-7b-instruct:free",

"microsoft/phi-3-mini-128k-instruct:free",

"microsoft/phi-3-medium-128k-instruct:free",

"openchat/openchat-7b:free",

"undi95/toppy-m-7b:free",

"huggingfaceh4/zephyr-7b-beta:free",

"gryphe/mythomax-l2-13b:free"

]

selected_model = st.selectbox("Select AI Model", AI_MODELS)

This dropdown lets users pick which AI model will handle the translation task. Different models might yield different results, so it’s interesting to see how each one performs.
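Long model IDs can be hard to scan in a dropdown. One option is to pass a label function to `st.selectbox` through its `format_func` parameter, so the list shows a shorter label while the selected value stays the full model ID. The helper below is a hypothetical sketch of such a label function; it is plain Python, so it can be tried without Streamlit.

```python
def model_label(model_id):
    """Turn 'provider/name:variant' into a label like 'name (provider, variant)'."""
    provider, _, name = model_id.partition("/")
    name, _, variant = name.partition(":")
    suffix = f"{provider}, {variant}" if variant else provider
    return f"{name} ({suffix})"


print(model_label("deepseek/deepseek-r1:free"))
# deepseek-r1 (deepseek, free)
print(model_label("google/gemini-flash-1.5-8b-exp"))
# gemini-flash-1.5-8b-exp (google)

# In the app, it could be wired in like this:
# selected_model = st.selectbox("Select AI Model", AI_MODELS, format_func=model_label)
```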

4. The Translate Button

Once the text, languages, and model are selected, users can click the “Translate” button to trigger the translation process. Here’s the code for that:

if st.button("Translate"):
    if input_text and source_lang != target_lang:
        with st.spinner("Translating..."):
            translated_text = translate_text(input_text, source_lang, target_lang, selected_model)
        st.subheader("Translated Text:")
        st.write(translated_text)
    elif source_lang == target_lang:
        st.warning("Please select different languages for source and target.")
    else:
        st.warning("Please enter some text to translate.")

When the user clicks the button, the app checks if:

- There is some text entered for translation.

- The source and target languages are different.

If all conditions are met, the app shows a spinner while the translation is processed. Once completed, it displays the translated text.
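The same checks can be pulled out into a small pure function, which makes them easy to test separately from the UI. This is a sketch: `validation_error` is a hypothetical helper, and it additionally treats whitespace-only input as empty, which the code above does not.

```python
def validation_error(text, source_lang, target_lang):
    """Return a warning message for invalid input, or None if it is valid."""
    if source_lang == target_lang:
        return "Please select different languages for source and target."
    if not text or not text.strip():
        return "Please enter some text to translate."
    return None


print(validation_error("Hello", "English", "Spanish"))  # None
print(validation_error("Hello", "English", "English"))
print(validation_error("   ", "English", "Spanish"))
```

In the app, the button handler would call this first, show the message with `st.warning` if one is returned, and only translate when the result is `None`.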

Sidebar for Additional Information

In the sidebar, I added some extra information about the OpenRouter API and the models being used:

st.sidebar.markdown("This translator uses the OpenRouter API to access various AI models for translation.")

st.sidebar.markdown("Different models may have different capabilities and performance characteristics.")

This helps users understand what’s happening behind the scenes and gives them an idea of how different models might behave.

Running the App

To run the app locally, I used the following command:

streamlit run app.py

Streamlit opens the app in the browser, and from there, I could paste text, choose languages, select an AI model, and get a translation almost instantly.

Putting It All Together

Here’s the complete AI Translator Python script we’ve built:

import streamlit as st

import requests

import json

import os

from dotenv import load_dotenv

# Load environment variables

load_dotenv()

# Get API key from environment variable

OPENROUTER_API_KEY = os.getenv('OPENROUTER_API_KEY')

REFERRER = "https://walterpinem.com/" # Replace with your actual site URL

APP_NAME = "Simple AI Translator"

# Free AI models

AI_MODELS = [

"google/gemini-2.5-pro-exp-03-25:free",

"google/gemma-3-1b-it:free",

"google/gemma-3-4b-it:free",

"google/gemma-3-12b-it:free",

"google/gemma-3-27b-it:free",

"google/gemini-2.0-flash-lite-preview-02-05:free",

"google/gemini-2.0-pro-exp-02-05:free",

"google/gemini-2.0-flash-thinking-exp:free",

"google/gemini-2.0-flash-thinking-exp-1219:free",

"google/gemini-2.0-flash-exp:free",

"google/learnlm-1.5-pro-experimental:free",

"google/gemini-flash-1.5-8b-exp",

"google/gemma-2-9b-it:free",

"deepseek/deepseek-chat-v3-0324:free",

"deepseek/deepseek-r1-zero:free",

"deepseek/deepseek-r1-distill-llama-70b:free",

"deepseek/deepseek-r1:free",

"deepseek/deepseek-chat:free",

"meta-llama/llama-3.3-70b-instruct:free",

"meta-llama/llama-3.2-3b-instruct:free",

"meta-llama/llama-3.2-1b-instruct:free",

"meta-llama/llama-3.2-11b-vision-instruct:free",

"meta-llama/llama-3.1-8b-instruct:free",

"meta-llama/llama-3-8b-instruct:free",

"qwen/qwen2.5-vl-3b-instruct:free",

"qwen/qwen2.5-vl-32b-instruct:free",

"qwen/qwq-32b:free",

"qwen/qwen2.5-vl-72b-instruct:free",

"deepseek/deepseek-r1-distill-qwen-32b:free",

"deepseek/deepseek-r1-distill-qwen-14b:free",

"qwen/qwq-32b-preview:free",

"qwen/qwen-2.5-coder-32b-instruct:free",

"qwen/qwen-2.5-72b-instruct:free",

"qwen/qwen-2.5-vl-7b-instruct:free",

"qwen/qwen-2-7b-instruct:free",

"allenai/molmo-7b-d:free",

"bytedance-research/ui-tars-72b:free",

"featherless/qwerky-72b:free",

"mistralai/mistral-small-3.1-24b-instruct:free",

"open-r1/olympiccoder-7b:free",

"open-r1/olympiccoder-32b:free",

"rekaai/reka-flash-3:free",

"moonshotai/moonlight-16b-a3b-instruct:free",

"nousresearch/deephermes-3-llama-3-8b-preview:free",

"cognitivecomputations/dolphin3.0-r1-mistral-24b:free",

"cognitivecomputations/dolphin3.0-mistral-24b:free",

"mistralai/mistral-small-24b-instruct-2501:free",

"sophosympatheia/rogue-rose-103b-v0.2:free",

"nvidia/llama-3.1-nemotron-70b-instruct:free",

"mistralai/mistral-nemo:free",

"mistralai/mistral-7b-instruct:free",

"microsoft/phi-3-mini-128k-instruct:free",

"microsoft/phi-3-medium-128k-instruct:free",

"openchat/openchat-7b:free",

"undi95/toppy-m-7b:free",

"huggingfaceh4/zephyr-7b-beta:free",

"gryphe/mythomax-l2-13b:free"

]

# Function to translate text

def translate_text(text, source_lang, target_lang, model):
    response = requests.post(
        url="https://openrouter.ai/api/v1/chat/completions",
        headers={
            "Authorization": f"Bearer {OPENROUTER_API_KEY}",
            "HTTP-Referer": REFERRER,
            "X-Title": APP_NAME,
        },
        data=json.dumps({
            "model": model,
            "messages": [
                {
                    "role": "system",
                    "content": f"You are a translator. Translate the given text from {source_lang} to {target_lang}. Only provide the translation, no additional explanations."
                },
                {
                    "role": "user",
                    "content": text
                }
            ]
        })
    )

    if response.status_code == 200:
        return response.json()['choices'][0]['message']['content']
    else:
        return f"Error: {response.status_code}, {response.text}"

# Streamlit UI

st.title("AI Translator")

# Input text area
input_text = st.text_area("Enter text to translate:", height=150)

# Language selection
col1, col2 = st.columns(2)

with col1:
    # Add more languages as needed
    source_lang = st.selectbox("Source Language", ["English", "Spanish", "French", "German", "Chinese", "Japanese"])

with col2:
    # Add more languages as needed
    target_lang = st.selectbox("Target Language", ["Spanish", "English", "French", "German", "Chinese", "Japanese"])

# Model selection
selected_model = st.selectbox("Select AI Model", AI_MODELS)

# Translate button
if st.button("Translate"):
    if input_text and source_lang != target_lang:
        with st.spinner("Translating..."):
            translated_text = translate_text(input_text, source_lang, target_lang, selected_model)
        st.subheader("Translated Text:")
        st.write(translated_text)
    elif source_lang == target_lang:
        st.warning("Please select different languages for source and target.")
    else:
        st.warning("Please enter some text to translate.")

# Add a note about the API usage

st.sidebar.markdown("This translator uses the OpenRouter API to access various AI models for translation.")

st.sidebar.markdown("Different models may have different capabilities and performance characteristics.")

st.sidebar.markdown("For more info, you can read [**this tutorial**](https://walterpinem.com/getting-started-with-openrouter/).")

This simple AI translator app was developed using the OpenRouter API and demonstrates how to work with multiple AI models, especially the free ones.

Check it out in the GitHub repository.

The app’s user interface was created with Streamlit, and API requests were handled using requests. This combination made the project enjoyable and practical.

One of the benefits of this app is its potential for expansion. Users can add more languages, experiment with different models, or incorporate other AI tasks.

This project may inspire others to create their own AI-powered applications using OpenRouter and Streamlit.

That’s all, folks! I hope you found this article interesting. Feel free to explore OpenRouter and what it offers, extend the AI Translator app as needed, and don’t forget to share this article with your friends!