How to Create an XML Sitemap for SEO With Python

As a seasoned SEO professional who is also learning Python, I frequently need to create XML sitemaps for the websites I optimize, especially custom-made or static HTML sites, where automation saves time.

An XML sitemap is a fundamental element in search engine optimization (SEO), as it enables search engines such as Google to better comprehend the website’s structure and crawl it with greater efficiency.

In this article, I will provide a step-by-step guide to generating an XML sitemap using a simple Python script from a list of URLs.

Always ensure that you have the necessary permissions to use and distribute any content generated or modified by scripts. This tutorial is provided for educational purposes.

Why an XML Sitemap Matters for SEO

Before diving into the code, let’s briefly discuss why an XML sitemap is important:

- Improved Crawl Efficiency: Sitemaps inform search engines about pages on your site that might not be discoverable through normal crawling.

- Metadata Inclusion: You can provide additional information like the last modification date, which can influence how search engines prioritize crawling.

- Large Sites Benefit: For large websites with complex structures, a sitemap helps search engines discover every page, although it does not guarantee that each one is indexed.

The Python Script for XML Sitemap Generator

Below is the Python script we’ll be using:

import datetime

def generate_sitemap(urls):
    # Use an aware UTC timestamp so the "+00:00" offset in the output is accurate
    today_date = datetime.datetime.now(datetime.timezone.utc).strftime('%Y-%m-%dT%H:%M:%S+00:00')
    # Start with the XML declaration and the opening <urlset> tag
    sitemap = '''<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'''
    # Append one <url> entry per URL
    for url in urls:
        sitemap += f'''
    <url>
        <loc>{url}</loc>
        <lastmod>{today_date}</lastmod>
    </url>'''
    sitemap += '''
</urlset>'''
    return sitemap

def main():
    # Read the URLs, skipping blank lines
    with open('urls.txt', 'r', encoding='utf-8') as file:
        urls = [line.strip() for line in file if line.strip()]
    sitemap = generate_sitemap(urls)
    # Write the finished sitemap to sitemap.xml
    with open('sitemap.xml', 'w', encoding='utf-8') as file:
        file.write(sitemap)
    print("Sitemap generated and saved as sitemap.xml")

if __name__ == "__main__":
    main()

The urls.txt File

The script expects a file named urls.txt in the same directory, containing one URL per line:

https://www.example.com/

https://www.example.com/about

https://www.example.com/contact

Step-by-Step Explanation

Let’s break down the script to understand how it works.

Importing the Necessary Module

import datetime

We import the datetime module to get the current date and time, which we’ll use in the sitemap to indicate when each URL was last modified.

Defining the generate_sitemap Function

def generate_sitemap(urls):
    today_date = datetime.datetime.now(datetime.timezone.utc).strftime('%Y-%m-%dT%H:%M:%S+00:00')
    sitemap = '''<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'''
    ...
    return sitemap

- today_date: Gets the current date and time in ISO 8601 format, which is the standard format for sitemaps.

- sitemap: Initializes the sitemap string with the XML declaration and the opening <urlset> tag, including the namespace.
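As a small aside on the timestamp, the sketch below shows one way to produce a timezone-aware UTC value in the same format. The helper name `utc_timestamp` is made up for illustration and is not part of the script in this article.

```python
import datetime

def utc_timestamp(moment=None):
    """Format a datetime as YYYY-MM-DDThh:mm:ss+00:00 in UTC."""
    if moment is None:
        # Ask for an aware datetime in UTC rather than naive local time.
        moment = datetime.datetime.now(datetime.timezone.utc)
    # Convert any aware datetime to UTC before formatting.
    moment = moment.astimezone(datetime.timezone.utc)
    # timespec='seconds' drops the microseconds.
    return moment.isoformat(timespec='seconds')

fixed = datetime.datetime(2023, 10, 1, 12, 30, 0, tzinfo=datetime.timezone.utc)
print(utc_timestamp(fixed))  # 2023-10-01T12:30:00+00:00
```

Using an aware datetime keeps the "+00:00" suffix truthful even when the machine running the script is set to another time zone.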

Looping Through the URLs

    for url in urls:
        sitemap += f'''
    <url>
        <loc>{url}</loc>
        <lastmod>{today_date}</lastmod>
    </url>'''

For each URL in the list:

- We append a <url> entry to the sitemap.

- <loc>: Specifies the URL location.

- <lastmod>: Indicates the last modification date of the URL.
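One detail worth keeping in mind: a URL that contains characters such as & must be entity-escaped before it is placed inside <loc>, otherwise the file is not valid XML. The following is a minimal sketch of that idea using the standard library's `xml.sax.saxutils.escape`; the function name `url_entry` is hypothetical and only used here for illustration.

```python
from xml.sax.saxutils import escape

def url_entry(url, lastmod):
    """Return one <url> element as a string, with the URL XML-escaped."""
    # escape() replaces &, < and > with their XML entities.
    safe_url = escape(url)
    return (
        "    <url>\n"
        f"        <loc>{safe_url}</loc>\n"
        f"        <lastmod>{lastmod}</lastmod>\n"
        "    </url>"
    )

print(url_entry("https://www.example.com/search?q=a&page=2", "2023-10-01"))
```

In the script from this article, the same effect could be achieved by writing `{escape(url)}` in place of `{url}` inside the f-string.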

Closing the Sitemap

    sitemap += '''
</urlset>'''

We close the <urlset> tag to complete the XML structure.

Returning the Sitemap

return sitemap

The function returns the complete sitemap string.
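String concatenation keeps the script easy to follow. As an alternative, and only as a sketch, the same document could be assembled with the standard library's `xml.etree.ElementTree`, which escapes special characters when it serializes. The function name `generate_sitemap_etree` and the choice to pass a single `lastmod` string are assumptions made for this example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def generate_sitemap_etree(urls, lastmod):
    """Build the sitemap as an element tree and return it as a string."""
    # Setting xmlns as a plain attribute writes the namespace on <urlset>.
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # Text is escaped automatically when the tree is serialized.
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

print(generate_sitemap_etree(["https://www.example.com/?a=1&b=2"], "2023-10-01"))
```

The output is written on a single line rather than indented, which search engines accept; the trade-off is readability when you open the file by hand.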

The main Function

def main():
    with open('urls.txt', 'r', encoding='utf-8') as file:
        urls = [line.strip() for line in file if line.strip()]
    ...

- Reading URLs: Opens urls.txt and reads all non-empty lines into a list called urls.

- Stripping Lines: Uses line.strip() to remove any leading or trailing whitespace.
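To see the cleaning step in isolation, here is a small sketch that applies the same stripping and blank-line filtering to an in-memory list. It also drops duplicate URLs, which the script in this article does not do; that extra step and the name `clean_urls` are assumptions added for illustration.

```python
def clean_urls(lines):
    """Strip whitespace, drop blank lines, and remove duplicates in order."""
    seen = set()
    urls = []
    for line in lines:
        url = line.strip()
        # Skip empty lines and URLs that were already collected.
        if url and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls

sample = [
    "https://www.example.com/\n",
    "\n",
    "  https://www.example.com/about  \n",
    "https://www.example.com/\n",
]
print(clean_urls(sample))
```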

Generating and Saving the Sitemap

    sitemap = generate_sitemap(urls)
    with open('sitemap.xml', 'w', encoding='utf-8') as file:
        file.write(sitemap)
    print("Sitemap generated and saved as sitemap.xml")

- Generating: Calls generate_sitemap(urls) to create the sitemap content.

- Writing to File: Opens sitemap.xml for writing and saves the sitemap content.

- Confirmation: Prints a message indicating that the sitemap has been generated.

Entry Point Check

if __name__ == "__main__":
    main()

This ensures that the main function runs only when the script is executed directly, not when it’s imported as a module.

How to Use the Python Script for Generating the XML Sitemap

The script is easy to use and runs quickly.

1. Prepare Your urls.txt File

Create a text file named urls.txt in the same directory as your script. List all the URLs you want to include in your sitemap, one per line:

https://www.yourwebsite.com/

https://www.yourwebsite.com/blog

https://www.yourwebsite.com/contact

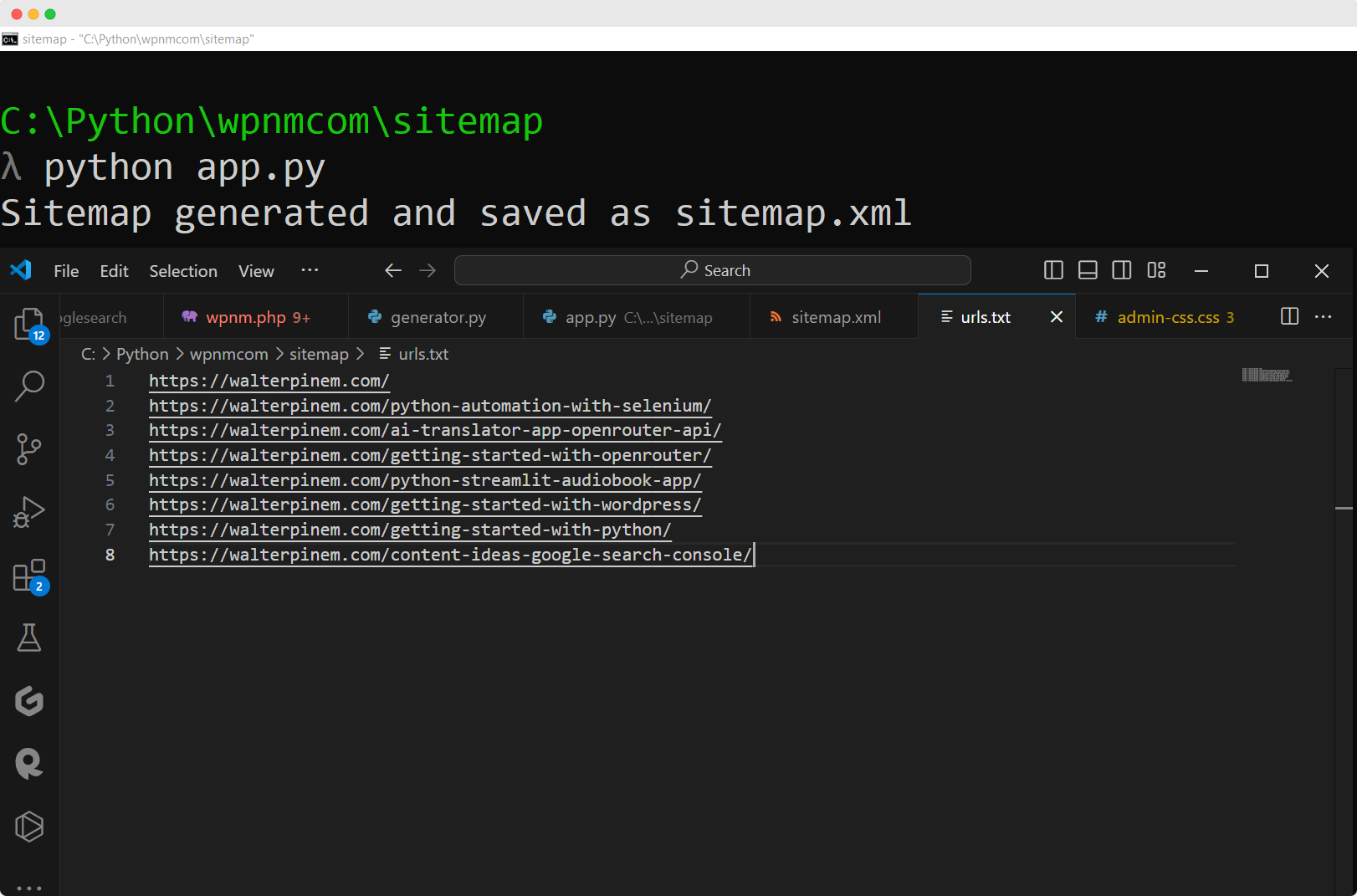

2. Run the Script

Open your terminal or command prompt, navigate to the directory containing the script, and run:

python sitemap_generator.py

Replace sitemap_generator.py with the name you’ve given to your script file.

3. Verify the Output

After running the script, you should see:

Sitemap generated and saved as sitemap.xml

Check the directory for a new file named sitemap.xml. Open it to verify the contents.
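If you prefer to check the file programmatically rather than by eye, one possible approach is to parse it and list the URLs it contains. This is a sketch only: the function name `list_sitemap_urls` is hypothetical, and the sample string stands in for the contents of sitemap.xml.

```python
import xml.etree.ElementTree as ET

# Prefix-to-namespace mapping used in the search path below.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def list_sitemap_urls(xml_text):
    """Parse sitemap XML and return the text of every <loc> element."""
    # Encode to bytes so the parser handles the XML declaration itself.
    root = ET.fromstring(xml_text.encode("utf-8"))
    return [loc.text for loc in root.findall("sm:url/sm:loc", NS)]

sample = '''<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
    <url><loc>https://www.example.com/</loc></url>
    <url><loc>https://www.example.com/about</loc></url>
</urlset>'''
print(list_sitemap_urls(sample))
```

To check the real file, read it with `open('sitemap.xml', encoding='utf-8').read()` and pass the result to the function. If the file is not well-formed XML, `ET.fromstring` raises `xml.etree.ElementTree.ParseError`.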

4. Submit Your Sitemap to Search Engines

Upload the sitemap.xml file to the root directory of your website. Then, submit it through Google Search Console or Bing Webmaster Tools to help search engines crawl your site more effectively.

Customizing the Python Script

Here are a few ways to customize the script to suit your needs:

Adding Priority and Change Frequency

If you want to include additional metadata such as <priority> or <changefreq>, you can modify the generate_sitemap function. Keep in mind that these values are only hints, and Google has stated that it ignores both:

def generate_sitemap(urls):
    ...
    for url in urls:
        sitemap += f'''
    <url>
        <loc>{url}</loc>
        <lastmod>{today_date}</lastmod>
        <changefreq>weekly</changefreq>
        <priority>0.8</priority>
    </url>'''
    ...
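The snippet above hard-codes the same values for every URL. If you would rather pass them in, a sketch along the following lines could work. The function name `url_entry_with_hints` and its default arguments are assumptions; the allowed changefreq values and the 0.0 to 1.0 priority range come from the sitemap protocol.

```python
from xml.sax.saxutils import escape

# Values the sitemap protocol allows for <changefreq>.
VALID_CHANGEFREQ = {"always", "hourly", "daily", "weekly", "monthly", "yearly", "never"}

def url_entry_with_hints(url, lastmod, changefreq="weekly", priority=0.8):
    """Return one <url> element including changefreq and priority."""
    if changefreq not in VALID_CHANGEFREQ:
        raise ValueError(f"Unsupported changefreq: {changefreq!r}")
    if not 0.0 <= priority <= 1.0:
        raise ValueError(f"priority must be between 0.0 and 1.0, got {priority}")
    return (
        "    <url>\n"
        f"        <loc>{escape(url)}</loc>\n"
        f"        <lastmod>{lastmod}</lastmod>\n"
        f"        <changefreq>{changefreq}</changefreq>\n"
        f"        <priority>{priority:.1f}</priority>\n"
        "    </url>"
    )

print(url_entry_with_hints("https://www.example.com/blog", "2023-10-05", "daily", 0.5))
```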

Handling Different Last Modified Dates

If different URLs have different last modified dates, you can adjust the script to read dates from the urls.txt file:

Updating urls.txt Format

https://www.yourwebsite.com/,2023-10-01

https://www.yourwebsite.com/blog,2023-10-05

https://www.yourwebsite.com/contact,2023-09-28

Modifying the Script

def main():
    with open('urls.txt', 'r', encoding='utf-8') as file:
        urls = []
        for line in file:
            if line.strip():
                # Split on the last comma only, in case the URL itself contains commas
                url, date = line.strip().rsplit(',', 1)
                urls.append((url, date))
    sitemap = generate_sitemap(urls)
    ...

And adjust the generate_sitemap function accordingly:

def generate_sitemap(urls):
    ...
    for url, date in urls:
        sitemap += f'''
    <url>
        <loc>{url}</loc>
        <lastmod>{date}T00:00:00+00:00</lastmod>
    </url>'''
    ...
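Because the date now comes from a hand-edited file, it may be worth checking each line before it reaches the sitemap. The sketch below parses one "URL,date" line and normalizes the date to YYYY-MM-DD; the function name `parse_line` is hypothetical, and raising ValueError on bad input is a design choice made for this example.

```python
import datetime

def parse_line(line):
    """Split 'URL,YYYY-MM-DD' into (url, normalized_date_string)."""
    # Split on the last comma only, so commas inside the URL survive.
    # A line without a comma fails to unpack and raises ValueError.
    url, date_text = line.strip().rsplit(",", 1)
    # strptime raises ValueError if the text is not a real calendar date.
    parsed = datetime.datetime.strptime(date_text.strip(), "%Y-%m-%d").date()
    return url.strip(), parsed.isoformat()

print(parse_line("https://www.yourwebsite.com/blog,2023-10-05\n"))
```

A caller could wrap `parse_line` in `try`/`except ValueError` to skip or report malformed lines instead of stopping the whole run.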

Error Handling and Validation

For a production-ready script, consider adding error handling:

- File Not Found: Check if urls.txt exists.

- Invalid URL Format: Validate URLs before including them.

- Date Format: Ensure dates are in the correct format.
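For the first item in the list, one possible approach is to catch the missing-file case and exit with a readable message instead of a traceback. This is a sketch under that assumption; the function name `read_lines` and the wording of the message are made up for illustration.

```python
import sys

def read_lines(path):
    """Return the non-empty lines of a text file, or exit with a message."""
    try:
        with open(path, "r", encoding="utf-8") as file:
            return [line.strip() for line in file if line.strip()]
    except FileNotFoundError:
        # sys.exit with a string prints it to stderr and exits with status 1.
        sys.exit(f"Could not find {path}. Create it with one URL per line.")
```

In the script from this article, `main()` could call `read_lines('urls.txt')` in place of its own `with open(...)` block.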

Example of adding URL validation:

import re

def is_valid_url(url):
    regex = re.compile(
        r'^(https?://)'    # http:// or https://
        r'([\w\-]+\.)+'    # One or more labels: subdomains and domain name
        r'\w+'             # Top-level domain
        r'(/\S*)?$'        # Optional path
    )
    return regex.match(url) is not None

Then, incorporate it into your URL reading logic:

if is_valid_url(url):
    urls.append(url)
else:
    print(f"Invalid URL skipped: {url}")
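A regular expression is only one way to do this check. As an alternative sketch, the standard library's `urllib.parse.urlsplit` can be used to confirm that a URL has an http or https scheme and a host name. The function name `is_valid_url_parsed` is hypothetical, and this check is deliberately loose: it does not confirm that the domain exists or that the page responds.

```python
from urllib.parse import urlsplit

def is_valid_url_parsed(url):
    """Return True for absolute http(s) URLs that include a host name."""
    # Reject anything containing whitespace before parsing.
    if any(ch.isspace() for ch in url):
        return False
    try:
        parts = urlsplit(url)
    except ValueError:
        # urlsplit raises ValueError for some malformed inputs,
        # such as an unclosed IPv6 bracket in the host.
        return False
    return parts.scheme in ("http", "https") and bool(parts.hostname)

for candidate in ["https://www.example.co.uk/path?x=1", "ftp://example.com/", "www.example.com"]:
    print(candidate, is_valid_url_parsed(candidate))
```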

Final Words

Creating an XML sitemap does not have to be a daunting task. With a simple Python script, you can automate the process and make it easier for search engines to crawl your website.

This saves time and supports your site's SEO efforts.

Feel free to customize and expand upon this script to suit your specific needs. Happy coding!