Building an AI-Powered SEO Content Brief Tool Using SerpApi & OpenAI – Optimizing content for both humans and search engines is essential.

To achieve this, content creators and SEO specialists are constantly seeking ways to automate tasks such as keyword research and content outline creation.

This article provides a step-by-step guide on how to build a content brief tool powered by artificial intelligence using SerpApi for keyword research and OpenAI for generating content briefs.

We will walk through the full Python code, from collecting Google SERP data with SerpApi to using OpenAI to generate structured content briefs. This guide aims to help you understand how each component works and how they fit together.

TL;DR

- Building an AI-powered SEO content brief tool using SerpApi and OpenAI automates keyword research and content planning, saving time and resources

- The tool uses SerpApi to fetch real-time SEO data such as autocomplete suggestions, related searches, and commonly asked questions from Google, ensuring content aligns with current trends

- SerpApi automation significantly reduces manual effort, providing insights for creating highly relevant and targeted content, making SEO more efficient and effective

- Combining SerpApi with GPT-4 for content generation saves hours of manual research and planning, producing optimized and well-structured content briefs quickly and efficiently

- The tool allows users to input SEO keywords, select AI providers, and choose language, country, and domain preferences, providing flexibility in generating content briefs

- Streamlit provides a user-friendly interface for users to interact with the tool, displaying results in markdown format and SERP data in a tabular format using Pandas

- Users can refine the AI prompt to achieve desired results and modify the code to fit specific requirements, adding or removing AI models and providers as needed

- SerpApi returns real-time data directly from Google, so briefs reflect current trends and search results, which makes keyword research more comprehensive and strengthens your SEO strategy

Why Build an AI SEO Content Brief Tool?

Creating a content brief manually can take hours, especially when gathering data such as keyword suggestions, related questions, and topic ideas from search engines.

An automated tool can expedite this process by fetching keyword data and then using AI to generate a well-structured content outline and suggestions. This saves time and ensures your content aligns with current SEO trends and best practices.

There’s a pip library called seo-keyword-research-tool, a Python SEO keywords suggestion tool that pulls data from Google Autocomplete, People Also Ask and Related Searches.

From its GitHub documentation, we can use it like this:

```python
from SeoKeywordResearch import SeoKeywordResearch

keyword_research = SeoKeywordResearch(
    query='starbucks coffee',
    api_key='<your_serpapi_api_key>',
    lang='en',
    country='us',
    domain='google.com'
)

auto_complete_results = keyword_research.get_auto_complete()
related_searches_results = keyword_research.get_related_searches()
related_questions_results = keyword_research.get_related_questions()

data = {
    'auto_complete': auto_complete_results,
    'related_searches': related_searches_results,
    'related_questions': related_questions_results
}

keyword_research.save_to_json(data)
keyword_research.print_data(data)
```

This produces the following JSON output:

```json
{
  "auto_complete": [
    "starbucks coffee menu",
    "starbucks coffee cups",
    "starbucks coffee sizes",
    "starbucks coffee mugs",
    "starbucks coffee gear",
    "starbucks coffee beans",
    "starbucks coffee near me",
    "starbucks coffee traveler"
  ],
  "related_searches": [
    "starbucks near me",
    "starbucks coffee price",
    "starbucks coffee beans",
    "starbucks company",
    "starbucks coffee menu",
    "starbucks merchandise",
    "starbucks coffee bags"
  ],
  "related_questions": [
    "What is the most popular Starbucks coffee?",
    "What is the number 1 Starbucks drink?",
    "What is the Tiktok coffee from Starbucks?",
    "Why is Starbucks coffee so famous?"
  ]
}
```

The keywords and questions are clearly extracted and presented in a readable JSON format.

Instead of using the raw data as is, we can pass it to an LLM (whichever model and provider you choose) to create a detailed SEO content brief.

So let’s get started.

Prerequisites

Make sure you have the following before starting with the code:

- Python 3.x installed on your system.

- SerpApi API key for fetching Google SERP data.

- OpenAI API key to connect with OpenAI’s AI models including the latest GPT-4.5 API.

- Groq API key to connect with open-source LLMs using Groq.

- OpenRouter API key to access a wide range of LLMs through a single API.

- Basic understanding of Python and web scraping concepts.

For SerpApi, Groq, and OpenRouter, I have created a comprehensive article for each, which you can read:

Getting Started With Google SERP Scraping With SerpApi


Getting Started with Groq: Building and Deploying AI Models


Getting Started with OpenRouter


Step 1: Setting Up Your Python Environment

The first step is to set up your Python environment. Install the required packages: openai for the OpenAI API, groq for the Groq SDK, and seo-keyword-research-tool, which provides the SeoKeywordResearch class.

```shell
pip install openai groq requests seo-keyword-research-tool
```

Modules such as json and collections are part of Python’s standard library, so they don’t need to be installed. Add any other third-party libraries your code needs to the same command.

Step 2: Importing Necessary Modules

Start by importing the required modules for handling API interactions and data manipulation:

```python
import json
from collections import Counter
from SeoKeywordResearch import SeoKeywordResearch
from openai import OpenAI
```

- json: Used to read and write data in JSON format.
- collections.Counter: Counts word frequencies for keyword extraction.
- SeoKeywordResearch: The class from the seo-keyword-research-tool package that fetches SEO data from SerpApi.
- openai: The OpenAI Python package, used to call the OpenAI API (and OpenAI-compatible APIs) for generating content.

Step 3: Initializing the OpenAI Client (or Groq or OpenRouter)

Fortunately, both Groq and OpenRouter are compatible with OpenAI’s client API.

You’ll need to initialize the OpenAI client using your API key:

```python
client = OpenAI(api_key='sk-123')
```

Make sure to replace sk-123 with your actual OpenAI API key.

If you want to use Groq, import its client and initialize it like this:

```python
from groq import Groq

client = Groq(
    api_key='gsk_123',
)
```

Then replace gsk_123 with your actual Groq API key.

If you want to use OpenRouter, keep the OpenAI client and point it at OpenRouter’s base URL:

```python
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key='sk-or-v1-123',
)
```

Then replace sk-or-v1-123 with your actual OpenRouter API key.

Step 4: Fetching Google SERP Data Using SerpApi

The fetch_serp_data Function

This function takes in a query and uses SerpApi to retrieve data such as autocomplete results, related searches, and related questions. You can adjust the language, country, and domain for more localized search results.

```python
def fetch_serp_data(query, api_key, lang='en', country='us', domain='google.com'):
    keyword_research = SeoKeywordResearch(
        query=query,
        api_key=api_key,
        lang=lang,
        country=country,
        domain=domain
    )

    auto_complete_results = keyword_research.get_auto_complete()
    related_searches_results = keyword_research.get_related_searches()
    related_questions_results = keyword_research.get_related_questions()

    data = {
        'auto_complete': auto_complete_results,
        'related_searches': related_searches_results,
        'related_questions': related_questions_results
    }

    return data
```

Explanation:

SeoKeywordResearch: This class, provided by the seo-keyword-research-tool package, handles communication with SerpApi. It exposes three kinds of results:

- Autocomplete Results: The suggestions you see when typing into Google’s search bar.
- Related Searches: Common searches related to the primary query.
- Related Questions: These typically appear as “People also ask” in SERPs and are useful for targeting long-tail keywords.

- query: The SEO keyword or topic you’re researching.
- api_key: Your SerpApi key, which provides access to the data.
- lang, country, domain: Optional parameters that set the search language, country, and Google domain.

This function returns a dictionary containing:

- Auto-complete suggestions: Common phrases users type when searching for the keyword.

- Related searches: Search terms related to the primary keyword.

- Related questions: Frequently asked questions about the topic.

This function consolidates all of this data into a dictionary (data), which can later be used for content brief generation.
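Because fetch_serp_data() constructs SeoKeywordResearch itself, every run calls SerpApi. One way to test the rest of the pipeline without spending API credits is to move the dict-building step into a function that accepts any object with the same three getter methods. The sketch below assumes you are willing to restructure the function this way. FakeKeywordResearch and collect_serp_data are hypothetical names and are not part of seo-keyword-research-tool.

```python
class FakeKeywordResearch:
    """Hypothetical stand-in for SeoKeywordResearch: same getters, canned data, no API calls."""

    def __init__(self, auto_complete, related_searches, related_questions):
        self._auto_complete = auto_complete
        self._related_searches = related_searches
        self._related_questions = related_questions

    def get_auto_complete(self):
        return list(self._auto_complete)

    def get_related_searches(self):
        return list(self._related_searches)

    def get_related_questions(self):
        return list(self._related_questions)


def collect_serp_data(keyword_research):
    """Build the same dict as fetch_serp_data() from any object exposing the three getters."""
    return {
        'auto_complete': keyword_research.get_auto_complete(),
        'related_searches': keyword_research.get_related_searches(),
        'related_questions': keyword_research.get_related_questions(),
    }


fake = FakeKeywordResearch(
    ["keyword clustering tool"],
    ["how to cluster keywords"],
    ["What is keyword clustering?"],
)
print(collect_serp_data(fake)['related_questions'])  # ['What is keyword clustering?']
```

In real use you would call collect_serp_data(SeoKeywordResearch(query=..., api_key=...)), and in tests you would pass the fake instead.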

Step 5: Processing SERP Data and Identifying Top Keywords

After fetching the SERP data, we need to process it and identify the most common keywords to target.

Word Frequency Counting with Counter

We use the Counter class from Python’s collections module to count the occurrences of keywords, filtering out common stop words such as “the,” “is,” and “and.”

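```python
from collections import Counter

# Pull the three lists out of the dict returned by fetch_serp_data()
auto_complete = serp_data['auto_complete']
related_searches = serp_data['related_searches']
related_questions = serp_data['related_questions']

# Combine all keywords and questions
all_keywords = auto_complete + related_searches + related_questions

# Find most common words (excluding stop words)
stop_words = set(['the', 'a', 'an', 'in', 'on', 'at', 'for', 'to', 'of', 'and', 'is', 'are'])
word_count = Counter(word.lower() for phrase in all_keywords for word in phrase.split() if word.lower() not in stop_words)
top_keywords = [word for word, count in word_count.most_common(5)]
```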

Explanation:

- all_keywords: We combine autocomplete suggestions, related searches, and related questions into one list.
- Stop Words: These are common words that carry little SEO value. We exclude them to focus on meaningful keywords.
- word_count: Counts the frequency of each word across all keywords.
- top_keywords: Extracts the 5 most frequently occurring keywords.

This list of keywords will form the foundation of our content brief.
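The counting above has two side effects. First, phrase.split() keeps punctuation, so "clustering?" and "clustering" are counted as different words. Second, a phrase that appears in more than one list (in the Starbucks example, "starbucks coffee menu" appears in both autocomplete and related searches) is counted twice. The sketch below is one possible variant, not the tool's own method. It deduplicates phrases and extracts words with a regular expression. The stop-word list is still English-only, so for lang values like 'id' or 'de' you would want a different list.

```python
import re
from collections import Counter

STOP_WORDS = {'the', 'a', 'an', 'in', 'on', 'at', 'for', 'to', 'of', 'and', 'is', 'are'}


def top_keywords(phrases, n=5, stop_words=STOP_WORDS):
    """Return the n most frequent non-stop-words across unique, lowercased phrases."""
    # Deduplicate phrases case-insensitively while keeping first-seen order
    unique_phrases = list(dict.fromkeys(p.lower() for p in phrases))
    words = (
        word
        for phrase in unique_phrases
        for word in re.findall(r"\w+", phrase)  # word characters only, so "?" is dropped
        if word not in stop_words
    )
    return [word for word, _ in Counter(words).most_common(n)]


phrases = [
    "starbucks coffee menu",
    "starbucks coffee beans",
    "starbucks coffee menu",  # duplicate coming from a second SERP list
    "What is the most popular Starbucks coffee?",
]
print(top_keywords(phrases, n=2))  # ['starbucks', 'coffee']
```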

Step 6: Generating the Content Brief Using OpenAI

Now that we have the SEO data, the next step is to create a content brief with an OpenAI model (the code below uses gpt-4o-mini, but any chat model works). OpenAI models can take structured input and generate human-readable content in many formats. Here, we instruct the model to create a detailed content brief from the extracted SEO data.

The generate_content_brief Function

This function takes the query and its SERP data, builds a prompt, and calls the chat completions API through the client initialized in Step 3 to generate the content brief.

```python
def generate_content_brief(query, serp_data):
    # Extract relevant information from SERP data
    auto_complete = serp_data['auto_complete']
    related_searches = serp_data['related_searches']
    related_questions = serp_data['related_questions']

    # Combine all keywords and questions
    all_keywords = auto_complete + related_searches + related_questions

    # Find most common words (excluding stop words)
    stop_words = set(['the', 'a', 'an', 'in', 'on', 'at', 'for', 'to', 'of', 'and', 'is', 'are'])
    word_count = Counter(word.lower() for phrase in all_keywords for word in phrase.split() if word.lower() not in stop_words)
    top_keywords = [word for word, count in word_count.most_common(5)]

    # Prepare the prompt; {query} keeps the brief on the topic you searched for
    prompt = f"""
    Create a content brief for an article about {query}. Use the following information:

    Top keywords: {', '.join(top_keywords)}

    Key questions to address:
    {' '.join(related_questions)}

    Related topics:
    {' '.join(related_searches)}

    The content brief should include:
    1. Suggested title
    2. Target keyword
    3. Article outline: Generate a comprehensive outline for the blog post/article.
    4. Key points to cover in each section
    5. Suggested word count
    6. Meta description
    """

    # Generate the content brief with the client initialized in Step 3
    response = client.chat.completions.create(
        model="gpt-4o-mini",  # Change this model ID to fit your provider (OpenAI, Groq, or OpenRouter)
        messages=[
            {"role": "system", "content": "You are a helpful SEO content strategist."},
            {"role": "user", "content": prompt}
        ]
    )

    return response.choices[0].message.content
```

Explanation:

- Combining Keywords: The function first combines all the keywords from the different SERP results (auto-complete, related searches, related questions).
- Keyword Analysis: It then filters out common stop words and uses Counter to find the top 5 most common keywords.
- Prompt Construction: The prompt uses the query as the article topic and includes the top keywords, related questions, and related searches. It asks for a detailed content brief with a suggested title, outline, and meta description.
- LLM API Call: client.chat.completions.create sends the prompt to gpt-4o-mini (or any other model ID you set) and returns the model’s reply.
- Response: The generated content brief includes a suggested title, target keyword, outline, key points, suggested word count, and meta description.

Step 7: Main Execution Block

Finally, we combine everything in the main block: we fetch the SERP data, save it, generate the content brief, and save the result.

```python
if __name__ == "__main__":
    serp_api_key = '123'  # Replace with your SerpApi API key
    query = 'keyword clustering in SEO'

    serp_data = fetch_serp_data(query, serp_api_key)

    with open('keyword_clustering_in_seo.json', 'w') as f:
        json.dump(serp_data, f, indent=2)
    print("SERP data saved to keyword_clustering_in_seo.json")

    content_brief = generate_content_brief(query, serp_data)
    print("\nGenerated Content Brief:")
    print(content_brief)

    with open('keyword_clustering_in_seo.txt', 'w') as f:
        f.write(content_brief)
    print("\nContent brief saved to keyword_clustering_in_seo.txt")
```

- Fetching SERP Data: Calls fetch_serp_data() to gather SERP data for the query 'keyword clustering in SEO'.
- Saving SERP Data: The results are saved to a JSON file (keyword_clustering_in_seo.json) for future reference.
- Generating Content Brief: Uses generate_content_brief() to create a detailed SEO content brief from the fetched SERP data.
- Saving the Brief: The content brief is saved to a text file (keyword_clustering_in_seo.txt).

Step 8: Putting It All Together and Running the Code

Finally, we bring everything together in the main.py script.

```python
import json
from collections import Counter

from SeoKeywordResearch import SeoKeywordResearch
from openai import OpenAI
# Uncomment the following line if you want to use Groq
# from groq import Groq

# Initialize the OpenAI client. Replace 'sk-123' with your key,
# or read it from an environment variable instead of hardcoding it.
client = OpenAI(
    api_key='sk-123'
)

# Initialize for Groq: uncomment if you want to use Groq
# client = Groq(
#     api_key='gsk_123',
# )

# Initialize for OpenRouter: uncomment if you want to use OpenRouter
# client = OpenAI(
#     base_url="https://openrouter.ai/api/v1",
#     api_key='sk-or-v1-123',
# )


def fetch_serp_data(query, api_key, lang='en', country='us', domain='google.com'):
    keyword_research = SeoKeywordResearch(
        query=query,
        api_key=api_key,
        lang=lang,
        country=country,
        domain=domain
    )

    auto_complete_results = keyword_research.get_auto_complete()
    related_searches_results = keyword_research.get_related_searches()
    related_questions_results = keyword_research.get_related_questions()

    data = {
        'auto_complete': auto_complete_results,
        'related_searches': related_searches_results,
        'related_questions': related_questions_results
    }

    return data


def generate_content_brief(query, serp_data):
    # Extract relevant information from SERP data
    auto_complete = serp_data['auto_complete']
    related_searches = serp_data['related_searches']
    related_questions = serp_data['related_questions']

    # Combine all keywords and questions
    all_keywords = auto_complete + related_searches + related_questions

    # Find most common words (excluding stop words)
    stop_words = set(['the', 'a', 'an', 'in', 'on', 'at', 'for', 'to', 'of', 'and', 'is', 'are'])
    word_count = Counter(word.lower() for phrase in all_keywords for word in phrase.split() if word.lower() not in stop_words)
    top_keywords = [word for word, count in word_count.most_common(5)]

    # Prepare the prompt, using the query as the article topic
    prompt = f"""
    Create a content brief for an article about {query}. Use the following information:

    Top keywords: {', '.join(top_keywords)}

    Key questions to address:
    {' '.join(related_questions)}

    Related topics:
    {' '.join(related_searches)}

    The content brief should include:
    1. Suggested title
    2. Target keyword
    3. Article outline: Generate a comprehensive outline for the blog post/article.
    4. Key points to cover in each section
    5. Suggested word count
    6. Meta description
    """

    # Generate the content brief
    response = client.chat.completions.create(
        model="gpt-4o-mini",  # Change this model ID to fit your needs, whether using OpenAI, Groq, or OpenRouter
        messages=[
            {"role": "system", "content": "You are a helpful SEO content strategist."},
            {"role": "user", "content": prompt}
        ]
    )

    return response.choices[0].message.content


# Main execution
if __name__ == "__main__":
    # Set your SerpApi API key
    serp_api_key = '123'

    # Fetch SERP data
    query = 'keyword clustering in SEO'
    serp_data = fetch_serp_data(query, serp_api_key)

    # Save SERP data to a JSON file
    with open('keyword_clustering_in_seo.json', 'w') as f:
        json.dump(serp_data, f, indent=2)
    print("SERP data saved to keyword_clustering_in_seo.json")

    # Generate content brief
    content_brief = generate_content_brief(query, serp_data)
    print("\nGenerated Content Brief:")
    print(content_brief)

    # Save the content brief to a file
    with open('keyword_clustering_in_seo.txt', 'w') as f:
        f.write(content_brief)
    print("\nContent brief saved to keyword_clustering_in_seo.txt")
```

Don’t forget to change the query/keyword, output file names, AI model, provider, and so on to fit your use case.

Here’s what happens in the main execution block:

- Fetch SERP Data: The script fetches data for the given query.

- Save SERP Data: It saves this data into a JSON file for later reference.

- Generate Content Brief: The script generates a content brief with an OpenAI model (gpt-4o-mini by default, or any AI model of your choice) based on the fetched SERP data.

- Save Content Brief: It saves the generated content brief into a text file.

If you save the script as main.py, run this command in your terminal:

```shell
python main.py
```

(On Windows, py main.py works too.) The output in the terminal will look something like this:

Pretty cool, right?

The scraped Google SERP data is also saved to the keyword_clustering_in_seo.json file, as the terminal output confirms.
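The script hardcodes keyword_clustering_in_seo.json and keyword_clustering_in_seo.txt, so every new query means renaming the files by hand. The Streamlit version later derives the name with query.replace(' ', '_'). That approach keeps characters like "/" or "?", which are awkward or invalid in file names. Below is a small sketch that derives a safe file name from the query. The helper name output_filename is hypothetical.

```python
import re


def output_filename(query, extension):
    """Turn 'keyword clustering in SEO' into 'keyword_clustering_in_seo.<extension>'."""
    # Collapse every run of non-word characters (spaces, '/', '?', ...) into one underscore
    slug = re.sub(r"\W+", "_", query.strip().lower()).strip("_")
    return f"{slug}.{extension}"


query = "keyword clustering in SEO"
print(output_filename(query, "json"))  # keyword_clustering_in_seo.json
print(output_filename(query, "txt"))   # keyword_clustering_in_seo.txt
```

You could then replace the hardcoded names in the main block with output_filename(query, "json") and output_filename(query, "txt").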

Integrating Streamlit with Our AI SEO Content Brief Tool

If running the tool and reading the results in the terminal isn’t fun enough for you, let’s build a web UI for it with Streamlit.

With Streamlit, every choice can be made dynamically instead of being hardcoded in the script. That includes Google Search parameters such as language, country, and domain, as well as the AI model and provider.

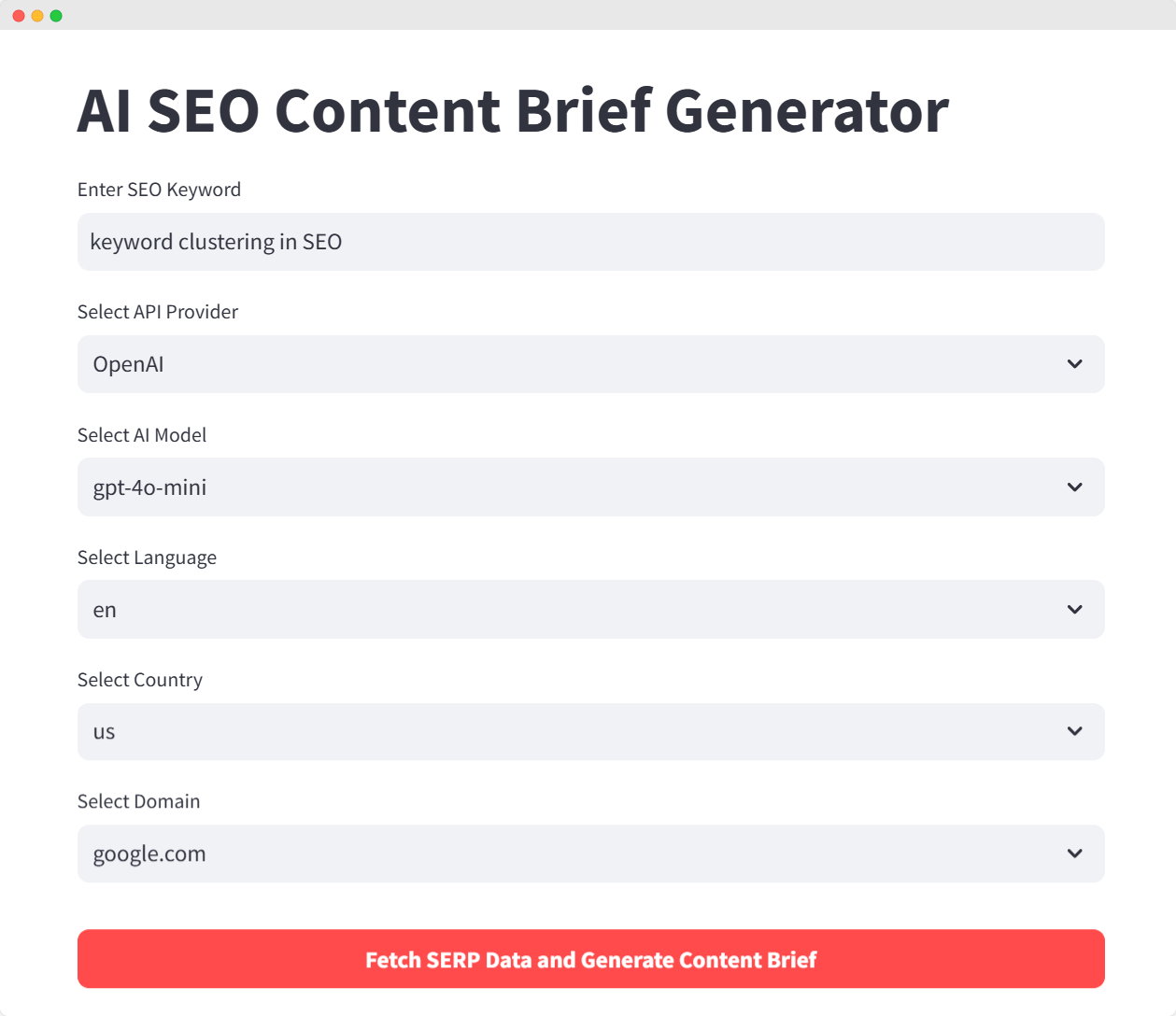

What we’re gonna build is a Streamlit web UI like this:

Let’s break down the code, understand how it works, and explore how each piece fits together to create this tool.

Before we go over the code, let’s briefly outline the main technologies we’ll use to create this tool. Each of these tools is essential for developing this SEO content brief generator.

Setting Up the Python and Streamlit Environment

Before starting with the code, we need to set up our environment. In this section, we will explain how to install the required libraries and set up environment variables for API keys.

1. Installing Required Libraries

To begin, install all the necessary dependencies using the following command:

```shell
pip install streamlit openai groq requests seo-keyword-research-tool python-dotenv pandas
```

This command will install the following libraries:

- Streamlit: For building the web interface.

- OpenAI: To interface with the OpenAI GPT models.

- Groq: For interfacing with Groq’s AI models.

- Requests: For making API calls.

- seo-keyword-research-tool: To pull keyword data from SerpApi.

- Python-dotenv: To load API keys securely from environment variables.

- Pandas: For handling and displaying tabular data in Streamlit.

2. Loading Environment Variables

We store API keys securely using environment variables. This allows us to separate sensitive information from the main codebase.

```python
from dotenv import load_dotenv
import os

# Load environment variables from .env file
load_dotenv()

# Define the API keys
openai_api_key = os.getenv("OPENAI_API_KEY")
groq_api_key = os.getenv("GROQ_API_KEY")
openrouter_api_key = os.getenv("OPENROUTER_API_KEY")
serp_api_key = os.getenv("SERPAPI_API_KEY")
```

Here’s how the .env file might look:

```
OPENAI_API_KEY=your-openai-key
GROQ_API_KEY=your-groq-key
OPENROUTER_API_KEY=your-openrouter-key
SERPAPI_API_KEY=your-serpapi-key
```

This keeps your API keys out of the code. Make sure .env itself is listed in your .gitignore so the keys never end up in the repository.
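os.getenv() returns None when a variable is missing. A typo in .env therefore only shows up later, as an authentication error from SerpApi or the AI provider. The sketch below checks for missing keys up front. The helper name missing_keys is hypothetical. In the Streamlit app you might check only SERPAPI_API_KEY plus the selected provider’s key and report the result with st.error().

```python
import os

REQUIRED_KEYS = ["OPENAI_API_KEY", "GROQ_API_KEY", "OPENROUTER_API_KEY", "SERPAPI_API_KEY"]


def missing_keys(names, environ=os.environ):
    """Return the names of environment variables that are unset or empty."""
    return [name for name in names if not environ.get(name)]


# A plain dict standing in for os.environ, so the example runs anywhere
fake_env = {"SERPAPI_API_KEY": "abc", "OPENAI_API_KEY": ""}
print(missing_keys(REQUIRED_KEYS, fake_env))
# ['OPENAI_API_KEY', 'GROQ_API_KEY', 'OPENROUTER_API_KEY']
```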

Fetching SERP Data Using SerpApi

Now that the environment is set up, the next step is to gather search engine data. In this section, we will use SerpApi to pull keyword suggestions and related search terms.

1. Fetching SEO Keyword Data

We use SerpApi to dynamically fetch SEO data such as auto-complete keywords, related searches, and related questions. This data will be the foundation of the content brief that our AI models will generate.

```python
def fetch_serp_data(query, api_key, lang='en', country='us', domain='google.com'):
    keyword_research = SeoKeywordResearch(
        query=query,
        api_key=api_key,
        lang=lang,
        country=country,
        domain=domain
    )

    auto_complete_results = keyword_research.get_auto_complete()
    related_searches_results = keyword_research.get_related_searches()
    related_questions_results = keyword_research.get_related_questions()

    data = {
        'auto_complete': auto_complete_results,
        'related_searches': related_searches_results,
        'related_questions': related_questions_results
    }

    return data
```

Explanation:

The fetch_serp_data() function takes the following arguments:

- query: The SEO keyword or phrase.
- api_key: Your SerpApi API key.
- lang: The language for the search results.
- country: The country for the search engine.
- domain: The Google domain (e.g., google.com, google.co.uk, etc.).

This function collects three key types of SEO data:

- Auto-complete results: Common keywords suggested by Google.

- Related searches: Search terms related to the main keyword.

- Related questions: Frequently asked questions from the SERP.

2. Example of SERP Data

The function returns the data in the following structure:

```json
{
  "auto_complete": ["keyword clustering tool", "keyword clustering algorithm"],
  "related_searches": ["seo keyword grouping", "how to cluster keywords"],
  "related_questions": ["What is keyword clustering?", "Why is keyword clustering important for SEO?"]
}
```

Generating SEO Content Brief with OpenAI, Groq, and OpenRouter

Once we’ve gathered the SERP data, the next step is to generate an AI-powered SEO content brief. This section explains how we use AI providers such as OpenAI, Groq, and OpenRouter to generate content from the gathered keywords.

1. Initializing the AI Client

We provide the flexibility to choose between OpenAI, Groq, and OpenRouter for generating the content brief. The initialize_client() function selects the appropriate API and initializes the client based on the user’s choice.

```python
from openai import OpenAI
from groq import Groq


def initialize_client(api, model_selection, api_key):
    # model_selection isn't needed to build the client; it is passed to the API call later
    if api == "OpenAI":
        return OpenAI(api_key=api_key)
    elif api == "Groq":
        return Groq(api_key=api_key)
    else:  # For OpenRouter
        return OpenAI(
            base_url="https://openrouter.ai/api/v1",
            api_key=api_key
        )
```

Explanation:

- The user can select one of the three AI platforms.

- Based on the selection, the appropriate client is initialized:

- For OpenAI, we use OpenAI’s API directly.

- For Groq, the Groq client is initialized.

- For OpenRouter, we use the OpenAI client with a custom base URL (https://openrouter.ai/api/v1).
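One quirk of initialize_client() is that its else branch sends any provider name other than "OpenAI" or "Groq" to OpenRouter, including a typo. An alternative, sketched here as an assumption rather than the article’s method, is a lookup table that records each provider’s base URL and environment variable and rejects unknown names. PROVIDERS and client_kwargs are hypothetical names.

```python
# Hypothetical table: provider -> (base_url, env var holding its key).
# A base_url of None means "use the SDK's default endpoint".
PROVIDERS = {
    "OpenAI": (None, "OPENAI_API_KEY"),
    "Groq": (None, "GROQ_API_KEY"),
    "OpenRouter": ("https://openrouter.ai/api/v1", "OPENROUTER_API_KEY"),
}


def client_kwargs(provider, environ):
    """Return keyword arguments for the client constructor, or raise for unknown providers."""
    if provider not in PROVIDERS:
        raise ValueError(f"Unknown provider: {provider!r}")
    base_url, env_var = PROVIDERS[provider]
    kwargs = {"api_key": environ.get(env_var)}
    if base_url is not None:
        kwargs["base_url"] = base_url
    return kwargs


print(client_kwargs("OpenRouter", {"OPENROUTER_API_KEY": "sk-or-v1-123"}))
```

With this table, you would build clients as OpenAI(**client_kwargs("OpenRouter", os.environ)) or Groq(**client_kwargs("Groq", os.environ)).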

2. Generating the SEO Content Brief

The generate_content_brief() function sends a prompt to the selected AI model and generates the content brief. This is the core of the tool’s functionality.

```python
def generate_content_brief(query, serp_data, client, model_selection):
    auto_complete = serp_data['auto_complete']
    related_searches = serp_data['related_searches']
    related_questions = serp_data['related_questions']

    all_keywords = auto_complete + related_searches + related_questions

    stop_words = set(['the', 'a', 'an', 'in', 'on', 'at', 'for', 'to', 'of', 'and', 'is', 'are'])
    word_count = Counter(word.lower() for phrase in all_keywords for word in phrase.split() if word.lower() not in stop_words)
    top_keywords = [word for word, count in word_count.most_common(5)]

    prompt = f"""
    Create a content brief for an article about {query}. Use the following information:

    Top keywords: {', '.join(top_keywords)}

    Key questions to address:
    {' '.join(related_questions)}

    Related topics:
    {' '.join(related_searches)}

    The content brief should include:
    1. Suggested title
    2. Target keyword
    3. Article outline: Generate a comprehensive outline for the blog post/article.
    4. Key points to cover in each section
    5. Suggested word count
    6. Meta description
    """

    response = client.chat.completions.create(
        model=model_selection,
        messages=[
            {"role": "system", "content": "You are a helpful SEO content strategist."},
            {"role": "user", "content": prompt}
        ]
    )

    return response.choices[0].message.content
```

Explanation:

- The function takes the following inputs:

  - query: The SEO keyword.
  - serp_data: The keyword data fetched using SerpApi.
  - client: The AI client initialized earlier.
  - model_selection: The AI model selected by the user.
- Top keywords are generated by counting the most frequently occurring words in the combined keyword data (auto_complete, related_searches, and related_questions).
- A detailed prompt is created that instructs the AI to generate an SEO content brief, including:

- Suggested title

- Target keyword

- Article outline

- Key points to cover

- Suggested word count

- Meta description

- The AI’s response is returned as the content brief.
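Prompt construction is plain string work, so it can be split into a pure helper and checked without calling any AI provider. The sketch below is hypothetical: build_prompt is not part of the original script. It mirrors the prompt above, with one change: each question and related search goes on its own bullet line instead of being joined with spaces, so phrases like "starbucks near me starbucks coffee price" don’t run together.

```python
def build_prompt(query, top_keywords, related_questions, related_searches):
    """Assemble the content-brief prompt; no API call involved."""
    questions = "\n".join(f"- {q}" for q in related_questions)
    topics = "\n".join(f"- {s}" for s in related_searches)
    return (
        f"Create a content brief for an article about {query}. "
        "Use the following information:\n\n"
        f"Top keywords: {', '.join(top_keywords)}\n\n"
        f"Key questions to address:\n{questions}\n\n"
        f"Related topics:\n{topics}\n\n"
        "The content brief should include:\n"
        "1. Suggested title\n"
        "2. Target keyword\n"
        "3. Article outline: Generate a comprehensive outline for the blog post/article.\n"
        "4. Key points to cover in each section\n"
        "5. Suggested word count\n"
        "6. Meta description\n"
    )


prompt = build_prompt(
    "keyword clustering in SEO",
    ["keyword", "clustering"],
    ["What is keyword clustering?", "Why is keyword clustering important for SEO?"],
    ["seo keyword grouping", "how to cluster keywords"],
)
print(prompt)
```

generate_content_brief() could then call build_prompt(query, top_keywords, related_questions, related_searches) and pass the result as the user message.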

Displaying Data in Streamlit

Now that the content brief has been generated, the next step is to display the results in the Streamlit interface. This section walks through how the results are presented to the user, including a table of SERP data and the generated content brief.

1. User Inputs in Streamlit

We allow users to input the SEO keyword, select the AI provider, and choose language, country, and domain preferences. Here’s how the user inputs are handled:

```python
def main():
    st.title("AI SEO Content Brief Generator")

    # Inputs
    query = st.text_input("Enter SEO Keyword", "keyword clustering in SEO", placeholder="e.g. keyword clustering in SEO")

    # Provider model IDs change over time; check each provider's model list if an ID is rejected
    model_options = {
        "OpenAI": ["gpt-4o", "gpt-4o-mini", "o1-preview", "o1-mini"],
        "Groq": ["llama-3.1-70b-versatile", "llama-3.1-8b-instant"],
        "OpenRouter": ["nousresearch/hermes-3-llama-3.1-405b:free", "google/gemma-2-9b-it:free", "mistralai/mistral-7b-instruct:free"]
    }

    selected_api = st.selectbox("Select API Provider", ["OpenAI", "Groq", "OpenRouter"])
    model_selection = st.selectbox("Select AI Model", model_options[selected_api])

    lang = st.selectbox("Select Language", ['en', 'id', 'es', 'fr', 'de', 'it'])
    country = st.selectbox("Select Country", ['us', 'id', 'uk', 'de', 'fr', 'es'])
    domain = st.selectbox("Select Domain", ['google.com', 'google.co.id', 'google.co.in', 'google.co.uk', 'google.de', 'google.es', 'google.fr'])
```

Explanation:

- Users input the SEO keyword they want to target.

- They can select the AI platform (OpenAI, Groq, or OpenRouter) and the specific model they want to use.

- Additionally, users can choose language, country, and domain preferences for the Google search results.
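The three selectboxes are independent, so a user can pair country 'de' with google.co.id. If you want the domain to follow the country by default, one option is a lookup table that covers the country codes offered in the app. The sketch below is hypothetical; DEFAULT_DOMAIN and default_domain are not part of the original code.

```python
# Hypothetical mapping from the app's country codes to a matching Google domain
DEFAULT_DOMAIN = {
    "us": "google.com",
    "id": "google.co.id",
    "uk": "google.co.uk",
    "de": "google.de",
    "fr": "google.fr",
    "es": "google.es",
}


def default_domain(country):
    """Return the Google domain for a country code, falling back to google.com."""
    return DEFAULT_DOMAIN.get(country, "google.com")


print(default_domain("uk"))  # google.co.uk
```

In main(), you could keep the domain list in a variable named domains and preselect the match with st.selectbox("Select Domain", domains, index=domains.index(default_domain(country))). Every mapped domain is already in that list, so index() won’t fail.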

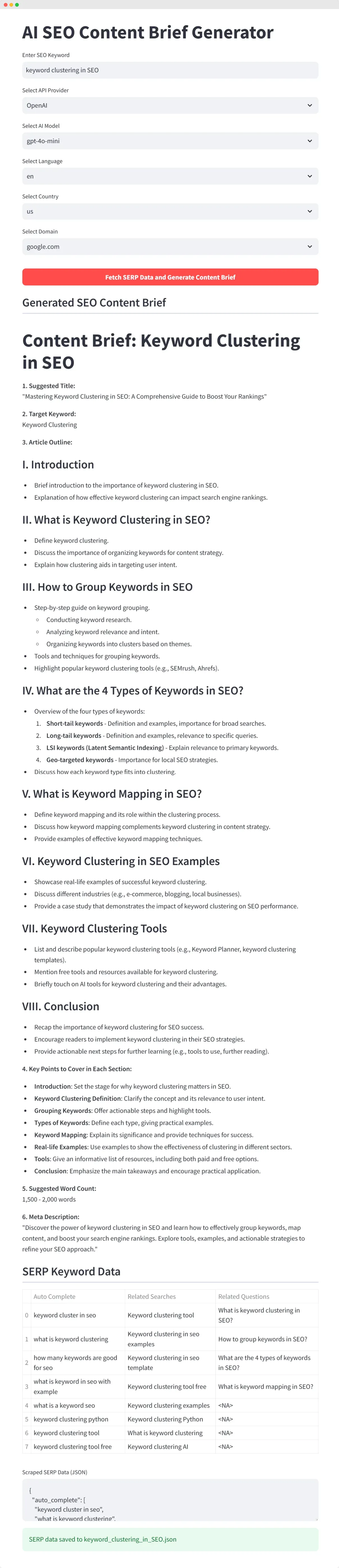

2. Displaying the Content Brief and SERP Data

Once the content brief is generated, it’s displayed in markdown format, and the SERP data is shown in a tabular format using Pandas.

```python
    if st.button("Fetch SERP Data and Generate Content Brief", type="primary"):
        serp_data = fetch_serp_data(query, serp_api_key, lang=lang, country=country, domain=domain)

        # Initialize AI client based on selection
        client = initialize_client(selected_api, model_selection, os.getenv(f"{selected_api.upper()}_API_KEY"))
        content_brief = generate_content_brief(query, serp_data, client, model_selection)

        # Display the content brief in markdown
        st.subheader("Generated SEO Content Brief", divider="gray")
        st.markdown(content_brief)

        # Convert JSON to table format using pandas
        serp_df = json_to_dataframe(serp_data)
        st.subheader("SERP Keyword Data", divider="gray")
        st.table(serp_df)

        # Show the SERP data as JSON in a plain text area
        serp_json = json.dumps(serp_data, indent=2)
        st.text_area("Scraped SERP Data (JSON)", serp_json)

        # Save to a file
        with open(f"{query.replace(' ', '_')}.json", 'w') as f:
            json.dump(serp_data, f, indent=2)
        st.success(f"SERP data saved to {query.replace(' ', '_')}.json")
```

Explanation:

When the button Fetch SERP Data and Generate Content Brief is clicked, the following steps happen:

- The SERP data is fetched.

- The content brief is generated using the selected AI model.

- The generated content brief is displayed in markdown format.

- The SERP data is displayed as a table using Pandas.

- The SERP data is also saved as a JSON file for later use.
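json_to_dataframe(), defined in the complete script, combines the three lists with pd.concat(..., axis=1). That aligns rows by position, so the cells in one row are not related to each other, and shorter columns are padded with NaN. The standard-library sketch below, a hypothetical serp_rows helper, builds the same row shape with itertools.zip_longest and pads with an empty string instead.

```python
from itertools import zip_longest


def serp_rows(serp_data):
    """Pair the i-th item of each list into one row, padding shorter lists with ''."""
    return list(zip_longest(
        serp_data['auto_complete'],
        serp_data['related_searches'],
        serp_data['related_questions'],
        fillvalue="",
    ))


rows = serp_rows({
    'auto_complete': ["keyword clustering tool", "keyword clustering algorithm"],
    'related_searches': ["seo keyword grouping", "how to cluster keywords"],
    'related_questions': ["What is keyword clustering?"],
})
for row in rows:
    print(row)
```

To display the result with blank cells instead of NaN, pass the rows to pd.DataFrame(rows, columns=['Auto Complete', 'Related Searches', 'Related Questions']).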

Putting the Streamlit AI SEO Content Brief App Script Together

Now, here’s the complete code:

# pip install streamlit openai groq requests seo-keyword-research-tool python-dotenv pandas

import os

from dotenv import load_dotenv

import json

import streamlit as st

from collections import Counter

from SeoKeywordResearch import SeoKeywordResearch

from openai import OpenAI

from groq import Groq

import pandas as pd

# Load environment variables from .env file

load_dotenv()

# Define the API keys

openai_api_key = os.getenv("OPENAI_API_KEY")

groq_api_key = os.getenv("GROQ_API_KEY")

openrouter_api_key = os.getenv("OPENROUTER_API_KEY")

serp_api_key = os.getenv("SERPAPI_API_KEY")

# Helper function to initialize the selected AI client

def initialize_client(api, model_selection, api_key):

if api == "OpenAI":

return OpenAI(api_key=api_key)

elif api == "Groq":

return Groq(api_key=api_key)

else: # For OpenRouter

return OpenAI(

base_url="https://openrouter.ai/api/v1",

api_key=api_key

)

# Function to fetch SERP data dynamically

def fetch_serp_data(query, api_key, lang='en', country='us', domain='google.com'):

keyword_research = SeoKeywordResearch(

query=query,

api_key=api_key,

lang=lang,

country=country,

domain=domain

)

auto_complete_results = keyword_research.get_auto_complete()

related_searches_results = keyword_research.get_related_searches()

related_questions_results = keyword_research.get_related_questions()

data = {

'auto_complete': auto_complete_results,

'related_searches': related_searches_results,

'related_questions': related_questions_results

}

return data

# Function to generate content brief
def generate_content_brief(query, serp_data, client, model_selection):
    auto_complete = serp_data['auto_complete']
    related_searches = serp_data['related_searches']
    related_questions = serp_data['related_questions']

    # Count the most frequent words across all SERP phrases, ignoring stop words
    all_keywords = auto_complete + related_searches + related_questions
    stop_words = set(['the', 'a', 'an', 'in', 'on', 'at', 'for', 'to', 'of', 'and', 'is', 'are'])
    word_count = Counter(word.lower() for phrase in all_keywords for word in phrase.split() if word.lower() not in stop_words)
    top_keywords = [word for word, count in word_count.most_common(5)]

    # Related searches are comma-separated so the model can tell the phrases apart
    prompt = f"""
    Create a content brief for an article about {query}. Use the following information:

    Top keywords: {', '.join(top_keywords)}

    Key questions to address:
    {' '.join(related_questions)}

    Related topics:
    {', '.join(related_searches)}

    The content brief should include:
    1. Suggested title
    2. Target keyword
    3. Article outline: Generate a comprehensive outline for the blog post/article.
    4. Key points to cover in each section
    5. Suggested word count
    6. Meta description
    """

    response = client.chat.completions.create(
        model=model_selection,
        messages=[
            {"role": "system", "content": "You are a helpful SEO content strategist."},
            {"role": "user", "content": prompt}
        ]
    )

    return response.choices[0].message.content

# Function to convert JSON data to a Pandas DataFrame for tabular display
def json_to_dataframe(serp_data):
    auto_complete_df = pd.DataFrame(serp_data['auto_complete'], columns=['Auto Complete'])
    related_searches_df = pd.DataFrame(serp_data['related_searches'], columns=['Related Searches'])
    related_questions_df = pd.DataFrame(serp_data['related_questions'], columns=['Related Questions'])

    # Combine into a single dataframe with three columns
    combined_df = pd.concat([auto_complete_df, related_searches_df, related_questions_df], axis=1)

    return combined_df

# Streamlit interface
def main():
    st.title("AI SEO Content Brief Generator")

    # Inputs
    query = st.text_input("Enter SEO Keyword", "keyword clustering in SEO", placeholder="e.g. keyword clustering in SEO")

    model_options = {
        "OpenAI": ["gpt-4o", "gpt-4o-mini", "o1-preview", "o1-mini"],
        "Groq": ["llama-3.1-70b-versatile", "llama-3.1-8b-instant"],
        "OpenRouter": ["nousresearch/hermes-3-llama-3.1-405b:free", "google/gemma-2-9b-it:free", "mistralai/mistral-7b-instruct:free"]
    }

    selected_api = st.selectbox("Select API Provider", ["OpenAI", "Groq", "OpenRouter"])
    model_selection = st.selectbox("Select AI Model", model_options[selected_api])
    lang = st.selectbox("Select Language", ['en', 'id', 'es', 'fr', 'de', 'it'])
    country = st.selectbox("Select Country", ['us', 'id', 'uk', 'de', 'fr', 'es'])
    domain = st.selectbox("Select Domain", ['google.com', 'google.co.id', 'google.co.in', 'google.co.uk', 'google.de', 'google.es', 'google.fr'])

    # Make the button full-width with bold text
    st.markdown(
        """
        <style>
        button {
            width: 100% !important;
        }
        button p {
            font-weight: bold !important;
        }
        </style>
        """,
        unsafe_allow_html=True,
    )

    # Generate SERP data and the content brief when the button is clicked
    if st.button("Fetch SERP Data and Generate Content Brief", type="primary"):
        serp_data = fetch_serp_data(query, serp_api_key, lang=lang, country=country, domain=domain)

        # Initialize AI client based on selection
        client = initialize_client(selected_api, model_selection, os.getenv(f"{selected_api.upper()}_API_KEY"))
        content_brief = generate_content_brief(query, serp_data, client, model_selection)

        # Display the content brief in markdown
        st.subheader("Generated SEO Content Brief", divider="gray")
        st.markdown(content_brief)

        # Convert JSON to table format using pandas
        serp_df = json_to_dataframe(serp_data)
        st.subheader("SERP Keyword Data", divider="gray")
        st.table(serp_df)

        # Show the SERP data as JSON in a plain text area
        serp_json = json.dumps(serp_data, indent=2)
        st.text_area("Scraped SERP Data (JSON)", serp_json)

        # Save to a file
        file_name = f"{query.replace(' ', '_')}.json"
        with open(file_name, 'w') as f:
            json.dump(serp_data, f, indent=2)
        st.success(f"SERP data saved to {file_name}")

if __name__ == "__main__":
    main()

When the "Fetch SERP Data and Generate Content Brief" button is clicked, the Streamlit app first scrapes the Google SERP data with SerpApi, then analyzes it with the selected AI model, and finally displays the generated content brief, a table of the SERP keyword data, and the raw JSON, which is also saved to a file.

We have created a tool that generates a detailed search engine optimization (SEO) content brief using SerpApi, OpenAI, Groq, OpenRouter, and Streamlit.

The brief combines real-time search result data with insights from AI models to provide actionable SEO recommendations.

The tool automates SEO content planning by bringing together keyword data from SerpApi and AI-driven analysis from OpenAI, Groq, and OpenRouter. Streamlit provides a user-friendly interface for users to interact with the tool.
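The "AI-driven analysis" step starts from a simple word-frequency count over the SerpApi phrases. The sketch below pulls that logic and the prompt assembly out of generate_content_brief so they can be tested without any API keys. The names top_keywords, build_prompt, and DEFAULT_SECTIONS are hypothetical, and the `sections` parameter is an assumed extension point for refining the prompt; the stop-word list is copied from the article's code.

```python
from collections import Counter

# Stop words copied from the article's generate_content_brief
STOP_WORDS = {"the", "a", "an", "in", "on", "at", "for", "to", "of", "and", "is", "are"}

# Hypothetical default list of brief sections, mirroring the article's prompt
DEFAULT_SECTIONS = (
    "Suggested title",
    "Target keyword",
    "Article outline",
    "Key points to cover in each section",
    "Suggested word count",
    "Meta description",
)


def top_keywords(serp_data, n=5):
    """Return the n most frequent non-stop-words across all SERP phrases."""
    phrases = (
        serp_data["auto_complete"]
        + serp_data["related_searches"]
        + serp_data["related_questions"]
    )
    counts = Counter(
        word.lower()
        for phrase in phrases
        for word in phrase.split()
        if word.lower() not in STOP_WORDS
    )
    return [word for word, _ in counts.most_common(n)]


def build_prompt(query, serp_data, sections=DEFAULT_SECTIONS, n_keywords=5):
    """Assemble the content-brief prompt; pass custom `sections` to refine it."""
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(sections, start=1))
    questions = "\n".join(f"- {q}" for q in serp_data["related_questions"])
    topics = ", ".join(serp_data["related_searches"])
    return (
        f"Create a content brief for an article about {query}.\n"
        f"Top keywords: {', '.join(top_keywords(serp_data, n_keywords))}\n"
        f"Key questions to address:\n{questions}\n"
        f"Related topics: {topics}\n"
        f"The content brief should include:\n{numbered}"
    )


sample = {
    "auto_complete": ["keyword clustering tool", "keyword clustering python"],
    "related_searches": ["keyword clustering free"],
    "related_questions": ["What is keyword clustering?"],
}
print(top_keywords(sample, n=2))  # ['keyword', 'clustering']
print(build_prompt("keyword clustering", sample, sections=["Suggested title"]))
```

Like the original, the split is on whitespace only, so "clustering?" and "clustering" are counted separately; stripping punctuation before counting is an easy tweak if that matters for your queries.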

This tool is useful for marketers, SEO specialists, and businesses aiming to enhance their content marketing strategies.

You can modify and extend the code as needed to fit your specific requirements.

You can refine the AI prompt to achieve the desired result. Additionally, you have the option to include more AI model IDs from providers like OpenAI, Groq, and OpenRouter, or selectively add and remove models to suit your preferences.
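One way to make adding or removing models a one-line change is to keep providers, base URLs, environment variable names, and model IDs in a single registry, then build both model_options and the client arguments from it. This is a minimal sketch under assumptions: PROVIDERS and client_kwargs are hypothetical names, the model lists are trimmed examples, and Groq is left out because the article's code uses the separate groq SDK rather than the OpenAI client.

```python
import os

# Hypothetical registry: add or remove providers and model IDs here.
# Verify base URLs against each provider's own documentation.
PROVIDERS = {
    "OpenAI": {
        "base_url": None,  # None means "use the OpenAI SDK default"
        "env_var": "OPENAI_API_KEY",
        "models": ["gpt-4o", "gpt-4o-mini"],
    },
    "OpenRouter": {
        "base_url": "https://openrouter.ai/api/v1",
        "env_var": "OPENROUTER_API_KEY",
        "models": ["google/gemma-2-9b-it:free"],
    },
}


def client_kwargs(provider, env=None):
    """Build keyword arguments for openai.OpenAI(...) for the chosen provider."""
    env = os.environ if env is None else env
    config = PROVIDERS[provider]
    api_key = env.get(config["env_var"])
    if not api_key:
        raise KeyError(f"Missing environment variable {config['env_var']}")
    kwargs = {"api_key": api_key}
    if config["base_url"]:
        kwargs["base_url"] = config["base_url"]
    return kwargs


# Usage inside the Streamlit app (requires the openai package):
# from openai import OpenAI
# model_options = {name: cfg["models"] for name, cfg in PROVIDERS.items()}
# client = OpenAI(**client_kwargs(selected_api))
```

Raising early on a missing key gives a clearer error than letting the SDK fail on the first request.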

Benefits of Using SerpApi for SEO

Using SerpApi for SEO offers several key advantages.

First, it provides real-time, accurate data from Google, ensuring that your content is optimized based on current trends and search results.

The ability to fetch autocomplete suggestions, related searches, and commonly asked questions allows for more comprehensive keyword research, improving your SEO strategy.
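The three sources often overlap, with the same phrase appearing in both autocomplete and related searches, sometimes with different capitalization. A small merge step, sketched here under the assumption that serp_data has the same three keys that fetch_serp_data returns, produces one de-duplicated keyword list. The function name merge_keyword_ideas is hypothetical.

```python
def merge_keyword_ideas(serp_data):
    """Merge the three SerpApi lists into one de-duplicated list.

    Duplicates are detected case-insensitively after trimming whitespace;
    the first spelling seen is kept and the original order is preserved.
    """
    seen = set()
    merged = []
    for source in ("auto_complete", "related_searches", "related_questions"):
        for phrase in serp_data.get(source, []):
            key = phrase.strip().lower()
            if key and key not in seen:
                seen.add(key)
                merged.append(phrase.strip())
    return merged


sample = {
    "auto_complete": ["keyword clustering", "Keyword Clustering tool"],
    "related_searches": ["keyword clustering tool ", "keyword grouping"],
    "related_questions": ["What is keyword clustering?"],
}
print(merge_keyword_ideas(sample))
# ['keyword clustering', 'Keyword Clustering tool', 'keyword grouping', 'What is keyword clustering?']
```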


Additionally, SerpApi’s automation significantly reduces manual effort, saving time and resources, while delivering insights that help in creating highly relevant and targeted content. This ensures a more efficient and effective approach to SEO.
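The same automation extends naturally to a batch of keywords. The sketch below assumes you pass in any callable that maps a query to a SERP dict (for example, a lambda wrapping the article's fetch_serp_data) and saves one JSON file per query, using the same underscore naming as the Streamlit app. batch_fetch and the serp_data output directory are hypothetical.

```python
import json
from pathlib import Path


def batch_fetch(queries, fetch, out_dir="serp_data"):
    """Fetch SERP data for several keywords and save one JSON file per query.

    `fetch` is any callable taking a query string and returning a dict.
    Returns a mapping from each query to the path of its saved file.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    saved = {}
    for query in queries:
        data = fetch(query)
        path = out / f"{query.replace(' ', '_')}.json"
        path.write_text(json.dumps(data, indent=2), encoding="utf-8")
        saved[query] = path
    return saved


# Usage with the article's function (serp_api_key as defined in the app):
# batch_fetch(["keyword clustering", "topic clusters"],
#             lambda q: fetch_serp_data(q, serp_api_key))
```

Passing the fetcher in as a parameter keeps the loop testable with a fake function and avoids spending API credits during development.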

Accurate and Fresh Data

SerpApi provides real-time data directly from Google, ensuring that the content you produce aligns with current search trends.

Time-Saving Automation

By automating keyword research and integrating it with large language models such as OpenAI's GPT-4o for content generation, this tool saves hours of manual research and planning.

Enhanced SEO Strategy

SerpApi helps uncover related searches, autocomplete suggestions, and common questions, providing a more holistic view of the SEO landscape. Combined with a large language model such as GPT-4o, it helps create optimized, well-structured content briefs tailored to specific SEO goals.
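To turn the common questions into outline structure, one simple heuristic is to group them by their leading question word, so that "What is..." questions feed a definition section and "How..." questions feed a how-to section. This is a sketch of that heuristic, not part of the original tool: group_questions and the QUESTION_WORDS tuple are hypothetical and only cover English phrasing.

```python
from collections import defaultdict

# Hypothetical intent buckets keyed by a question's first word (English only)
QUESTION_WORDS = ("what", "how", "why", "when", "which", "where", "who", "can", "is", "does")


def group_questions(questions):
    """Group related questions by their leading question word; others go to 'other'."""
    groups = defaultdict(list)
    for question in questions:
        words = question.strip().split()
        first = words[0].lower() if words else ""
        groups[first if first in QUESTION_WORDS else "other"].append(question)
    return dict(groups)


questions = [
    "What is keyword clustering?",
    "How do you cluster keywords?",
    "What are the benefits of keyword clustering?",
    "Keyword clustering vs topic clustering",
]
print(group_questions(questions))
```

Each group could then be passed to the prompt as a suggested section, giving the model a structure grounded in what searchers actually ask.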

Putting It All Together

Building an AI SEO content brief tool using SerpApi and OpenAI allows you to automate time-consuming aspects of SEO research and content planning.

By combining powerful SERP data extraction with AI-driven content generation, this tool helps create optimized, structured, and high-quality content briefs quickly and efficiently.

With this guide, you now have the knowledge to build and customize your own AI-powered SEO tools. Start by experimenting with different search queries and content formats to discover the full potential of this approach!