Getting Started with Groq

The combination of the Groq API and Llama 3.3 offers a fast way to build and deploy applications powered by large language models.

Groq provides a platform that runs AI workloads efficiently on its own high-performance accelerators. Llama 3.3, Meta's openly available large language model, is one of the models you can run on that platform to build natural language processing solutions.

This guide walks you step by step through using the Groq API with Llama 3.3, from setting up your environment to sending your first request to the model.

What is Groq?

Groq is an AI company that builds its own inference hardware, the Language Processing Unit (LPU), and makes it available to developers through GroqCloud and the Groq API.

Groq's hardware is designed for inference rather than training: the platform serves openly available models, such as Meta's Llama family, and aims to return their output with very low latency.

This makes Groq particularly valuable for applications where response time matters, such as chatbots, real-time assistants, and pipelines that make many LLM calls.

While OpenRouter and Kluster.ai act as platforms or marketplaces for both free and paid AI models, Groq provides an ultra-fast inference engine for trying open source AI models.

The Power of Groq with Its Fast AI Inference

Groq aims to disrupt the AI hardware landscape with its Language Processing Unit (LPU) chip. Groq has demonstrated the LPU generating around 500 tokens per second on some models, setting a new bar for inference speed.

Groq's founders are experienced professionals in AI and machine learning. Notable among them is CEO Jonathan Ross, who helped create the Tensor Processing Unit (TPU) at Google, a chip built to accelerate machine learning workloads. Under his leadership, the company aims to make a significant impact on the industry.

Key Features of Groq

Groq offers several features that make it a powerful tool for running large language models.

- Fast inference: models run on Groq's own LPU hardware, which is built for low-latency, high-throughput text generation.

- OpenAI-compatible API: Groq's API mirrors OpenAI's chat completions interface, so many existing LLM apps can switch to Groq with small code changes.

- Official SDKs: Groq provides Python and JavaScript libraries, which makes integration with other Python data science tools and web applications straightforward.

- Openly available models: GroqCloud hosts models from developers such as Meta and Google, and a free trial lets you test them.

Identifying the Problem

Groq aims to solve a problem that other prominent services have not prioritized. Providers such as xAI (Grok), OpenAI, Google (Gemini), and Meta (Llama) have established themselves as leaders in model capability, focusing on accuracy, problem-solving, and reasoning.

However, inference speed has received far less attention. This is where Groq's chip architecture comes into play.

Groq has launched a cloud service, GroqCloud, that comes with AI tools and a free trial, so you can see its capabilities for yourself. Today, we'll take a closer look at Groq's ecosystem.

Groq’s Ecosystem

Groq has recently released a suite of Python and JavaScript libraries that simplify integrating its services. Notably, Groq’s API definition mirrors OpenAI’s, making it easy to get started and test within existing large language model (LLM) apps.

Integration tools like LangChain and LlamaIndex also support Groq, providing a smooth onboarding experience for building LLM applications with Retrieval-Augmented Generation (RAG) capabilities.
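Because Groq's API mirrors OpenAI's, a chat completion is an HTTPS POST with a bearer token and a JSON body. The minimal sketch below uses only Python's standard library to build such a request. It assumes the OpenAI-compatible endpoint `https://api.groq.com/openai/v1/chat/completions`; the helper names are hypothetical, and the sketch illustrates the request shape rather than replacing the official SDK.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible chat completions endpoint.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_chat_request(api_key: str, model: str, messages: list[dict]) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Explicit User-Agent; some API gateways reject urllib's default one.
            "User-Agent": "groq-article-example/0.1",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

To send a real request, call `send_chat_request(build_chat_request(your_key, "llama-3.3-70b-versatile", [{"role": "user", "content": "Hello"}]))`. Keeping the build and send steps separate makes it easy to inspect the exact request before any network call happens.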

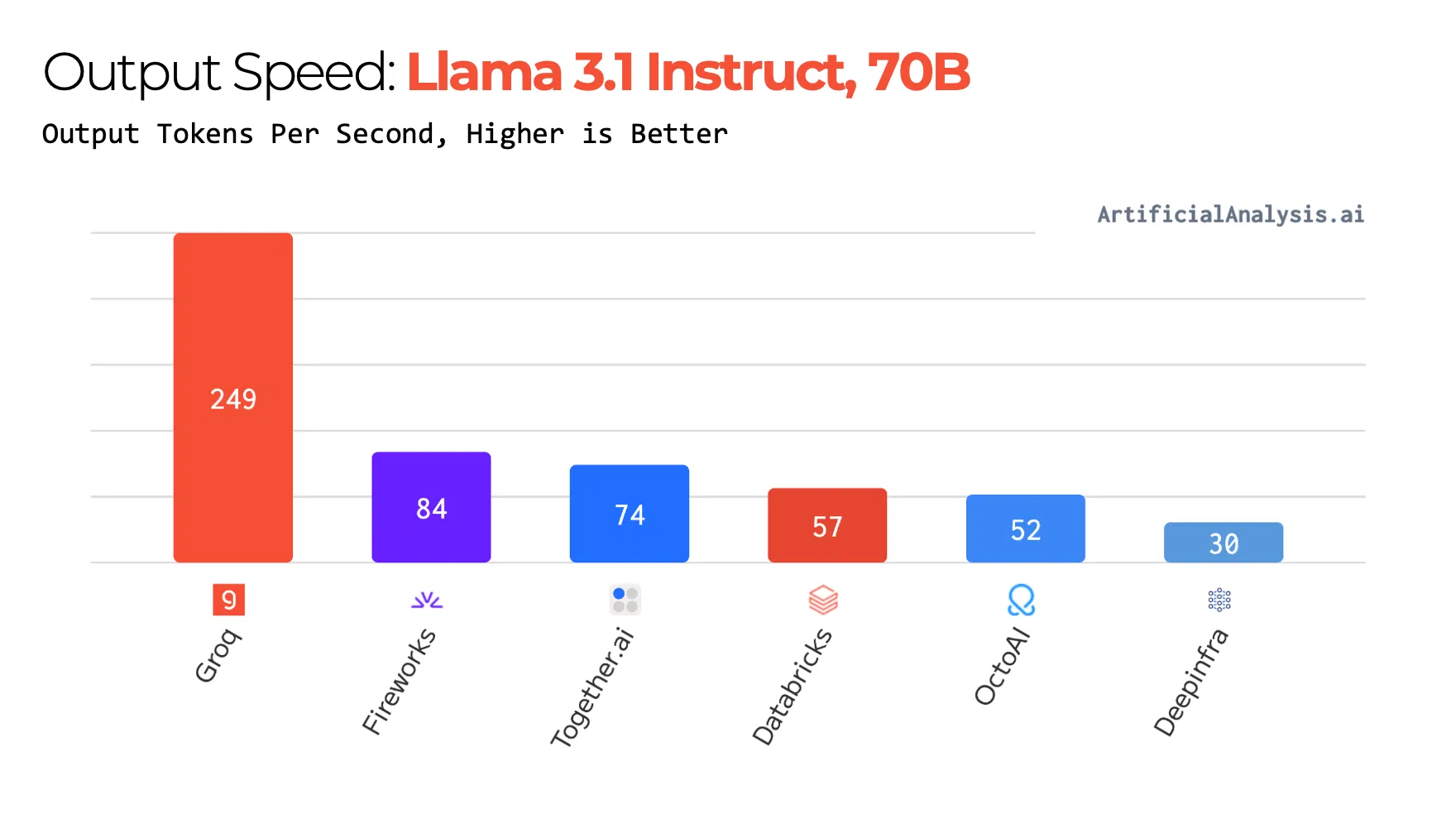

Groq’s Speed for Openly-Available AI Models

Source: Groq

Groq supports leading open source AI models from major developers such as Meta, Mistral AI, Google, and OpenAI.

Compared with other inference providers such as Together.ai and OctoAI, Groq's LPU-based service significantly outperforms them on speed, as the image above shows.

AI Models Provided by Groq

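At the time of testing, Groq offered the following models:

| Model | Details |
|---|---|
| Llama 3.1 405B | Offline due to overwhelming demand, though I was able to test it. |
| Distil-Whisper English | English speech recognition, a distilled version of OpenAI's Whisper |
| Gemma 2 9B | Google |
| Gemma 7B | Google |
| Llama 3 Groq 70B Tool Use (Preview) | Groq's fine-tune of Llama 3 for tool use (function calling) |
| Llama 3 Groq 8B Tool Use (Preview) | Groq's fine-tune of Llama 3 for tool use (function calling) |
| Llama 3.3 70B | Meta |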

Llama 3.1 405B represented a major breakthrough in capability. At its release, it was the largest and most capable openly available large language model, and Meta's benchmarks showed it competing with leading closed-source models such as GPT-4o and Claude 3.5 Sonnet.

This milestone made it possible for enterprises, startups, researchers, and developers to tap into a model of this scale without restrictive proprietary constraints, driving innovation and collaboration.

Through GroqCloud, Llama 3.1 405B could run at exceptional speed, enabling AI innovators to develop more sophisticated and robust applications.

I was able to test the Llama 3.1 405B model for a short time before Groq took it offline. It was impressive, and through the Groq API the responses arrived almost instantly.

Getting Started with Groq API

Groq offers a free trial that lets users test openly available AI models, backed by the speed of its LPU chips, through both GroqCloud and the Groq API.

In this article, we'll explore these open source AI models through the Groq API.

1. Create a Groq Account

To get started, you’ll need to create an account on the Groq platform if you don’t already have one.

As part of this process, you may be asked to provide some basic information, including your name, email address, and a brief description of how you plan to use their services.

Skip this part if you already have an account.

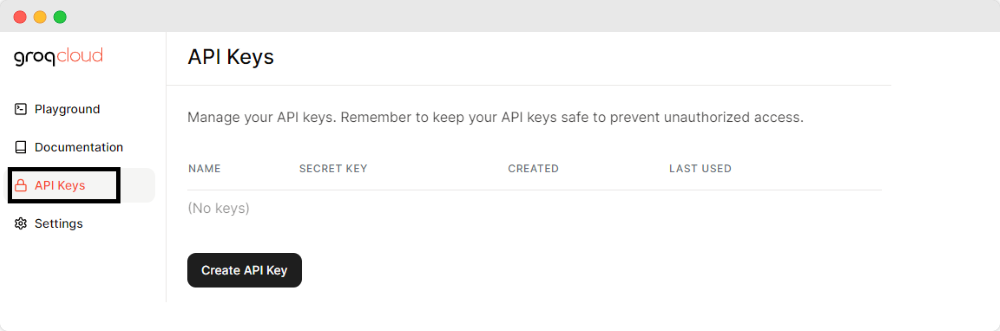

2. Log In to GroqCloud

Log in to your account, go to GroqCloud, and click API Keys to access your API keys.

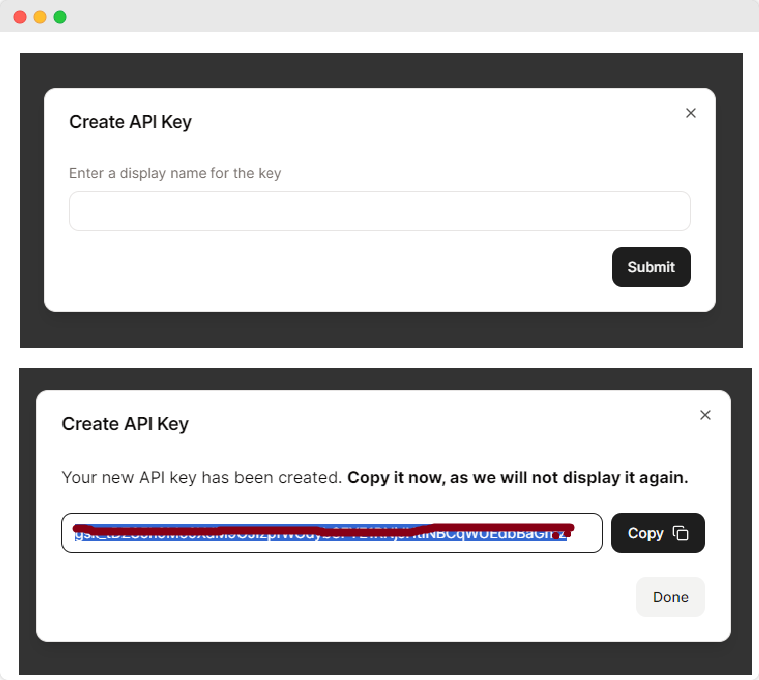

3. Create a New Groq API Key

On the API Keys page, look for the option to create a new key. The process typically involves the following steps:

- Click the “Create API Key” button.

- Give your API key a name. This is optional but helpful for organization if you plan on creating multiple keys.

- Set permissions or a scope for the key if the console asks for them. These determine which actions the key can and cannot perform.

Once you’ve generated an API key, it will be displayed on your screen. Be sure to copy and store this key securely, as you may only have one opportunity to view it due to security measures in place to protect it.
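Rather than pasting the key into source code, a common practice is to keep it in an environment variable. The Groq Python SDK reads `GROQ_API_KEY` by default when no `api_key` argument is passed. The hypothetical helper below simply fails early with a clear message if the variable is missing, so a misconfigured environment is caught before any request is sent.

```python
import os


def load_groq_api_key(env_var: str = "GROQ_API_KEY") -> str:
    """Return the API key from the environment, or raise a helpful error."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set. Export it first, e.g. export {env_var}=your-key"
        )
    return key
```

With the variable set, you can write `client = Groq(api_key=load_groq_api_key())`, or just `Groq()` and let the SDK pick up the key itself.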

Testing Llama 3.3 70B AI Model with Groq API Using Python

The best way to get started with Groq API is via their own Groq Quickstart documentation.

In this article, we'll use Python to explore the speed and effectiveness of open source AI models served through the Groq API on Groq's LPU chips.

Fortunately, Groq offers an official Python SDK, and its API is compatible with OpenAI's chat completions interface. To begin working with Python, first install the Groq Python library by running the following command:

```bash
pip install groq
```

Depending on what you're going to build, you can import any other libraries you need later.

In this article, let’s try to summarize a long text using Llama 3.3 70B. Follow this guide step by step.

Summarize a Long Text with Llama 3.3 70B AI Model via Groq API in Python

This section shows how to use the Llama 3.3 70B model via the Groq API to generate summaries of lengthy texts.

We'll break the Python code into manageable steps and explain each component and its role in effective text summarization.
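The script in this guide sends the whole text in one request, which works as long as the text fits in the model's context window. For very long documents, a common workaround (not part of the original script) is map-reduce summarization: split the text into chunks, summarize each chunk, then summarize the combined summaries. A minimal paragraph-based splitter might look like this; it uses a character budget as a rough stand-in for tokens, and the `max_chars` default is an arbitrary assumption.

```python
def split_into_chunks(text: str, max_chars: int = 8000) -> list[str]:
    """Split text on blank lines into chunks of at most max_chars characters.

    Paragraphs are packed together until the budget is reached; a single
    paragraph longer than max_chars is cut into fixed-size slices.
    """
    if max_chars < 1:
        raise ValueError("max_chars must be positive")
    chunks: list[str] = []
    current = ""
    for paragraph in (p.strip() for p in text.split("\n\n")):
        if not paragraph:
            continue
        # Break oversized paragraphs into slices that fit the budget.
        pieces = [paragraph[i:i + max_chars] for i in range(0, len(paragraph), max_chars)]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent as the user message, and the partial summaries joined and summarized once more.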

Constructing the Python Code

We are performing the following tasks:

- Initialize the Groq Client: Sets up the API client with your API key.

- Create a Completion Request: Sends a request to the Groq API to generate a summary based on the provided text.

- Print the Summary: Outputs the summary received from the API.

Step-by-Step Code Explanation of the Python Script

Let’s break down the code and understand each part in detail.

Importing the Groq Library

```python
from groq import Groq
```

The Groq class from the groq library is imported. This class handles the API interactions with Groq, including sending requests and receiving responses.

Initializing the Groq Client

```python
client = Groq(
    api_key='{Groq API Key here}',  # Put your API key here
)
```

Here, we initialize the Groq client with your API key. Replace '{Groq API Key here}' with your actual Groq API key. This step authenticates your requests to the Groq API.

Creating the Completion Request

```python
completion = client.chat.completions.create(
    model="llama-3.3-70b-versatile",  # Groq model ID for Llama 3.3 70B
    messages=[
        {
            "role": "system",
            "content": "##Task\nYou are an expert in summarizing text. Your task is to extract essential insights and generate a summary of the provided piece of text... Make sure you analyze the content thoroughly and identify key insights, so that you generate a well structured summary covering all the key points and demonstrating a deep understanding of the content.\n\n##Input\n- Text to Summarize: ```{input}```\n- Format: paragraphs\n\n##Output\n- Generate a concise summary with the main ideas presented in 1-2 paragraphs, and continue with other paragraphs.\n- The summary should be coherent and maintain the overall tone and style of the input text.\n- Keep the language accessible, avoiding overly technical or complex phrasing.\n- Do not include any additional explanations, introductions like \"Here is a concise summary of the provided text in paragraphs:\", or concluding statements. Strictly follow this instruction and format."
        },
        {
            "role": "user",
            "content": "A Large Language Model (LLM) is a type of artificial intelligence (AI) designed to process and generate human-like language. LLMs are trained on massive amounts of text data, which enables them to learn patterns, relationships, and structures of language. This training data can come from various sources, including books, articles, research papers, websites, and social media platforms. By analyzing and processing this vast amount of text data, LLMs can develop a deep understanding of language, including grammar, syntax, semantics, and pragmatics."
        }
    ],
    temperature=1,
    max_tokens=1024,
    top_p=1,
    stream=False,
    stop=None,
)
```

Here's a detailed explanation of each part:

- `model`: Specifies the AI model to use, in this case `"llama-3.3-70b-versatile"`, Groq's ID for Llama 3.3 70B. Make sure you use the exact model ID provided by Groq.

- `messages`: Contains the conversation context for the API request. It includes:
  - System message: Instructions for the model about the task, including how to format the summary and the nature of the input text.
  - User message: The actual content to summarize.

- `temperature`: Controls the randomness of the output. A value of `1` produces more varied, creative output, while `0` makes it more deterministic.

- `max_tokens`: Limits the number of tokens in the response. Tokens are chunks of text, often pieces of words, so this is neither a word count nor a character count. Adjust it based on the expected length of the summary.

- `top_p`: Controls the diversity of the output through nucleus sampling. Setting it to `1` means all possible tokens are considered.

- `stream`: Whether to stream the response. `False` means the entire response is returned at once.

- `stop`: Defines stop sequences that end generation when encountered. `None` means no specific stopping criteria.
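With `stream=True`, the SDK returns an iterator of chunks instead of a single response. As in OpenAI's API, each chunk carries the next piece of text in `choices[0].delta.content`, which can be `None` (for example in the final chunk). The sketch below, whose helper name is hypothetical, prints text as it arrives and returns the full summary; it accepts any iterable of chunk objects so it can be tried without a network connection.

```python
from typing import Any, Iterable


def collect_stream(chunks: Iterable[Any], echo: bool = True) -> str:
    """Join streamed chunk deltas into the full response text.

    Each chunk is expected to look like an OpenAI-style streaming chunk:
    chunk.choices[0].delta.content holds the next piece of text or None.
    """
    parts: list[str] = []
    for chunk in chunks:
        if not chunk.choices:
            continue  # skip chunks that carry no choices
        piece = chunk.choices[0].delta.content
        if piece:
            parts.append(piece)
            if echo:
                print(piece, end="", flush=True)
    if echo:
        print()  # finish the line after streaming
    return "".join(parts)
```

For example: `stream = client.chat.completions.create(model="llama-3.3-70b-versatile", messages=messages, stream=True)` followed by `summary = collect_stream(stream)`. Streaming mainly improves perceived latency, since the first words appear before the whole summary is generated.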

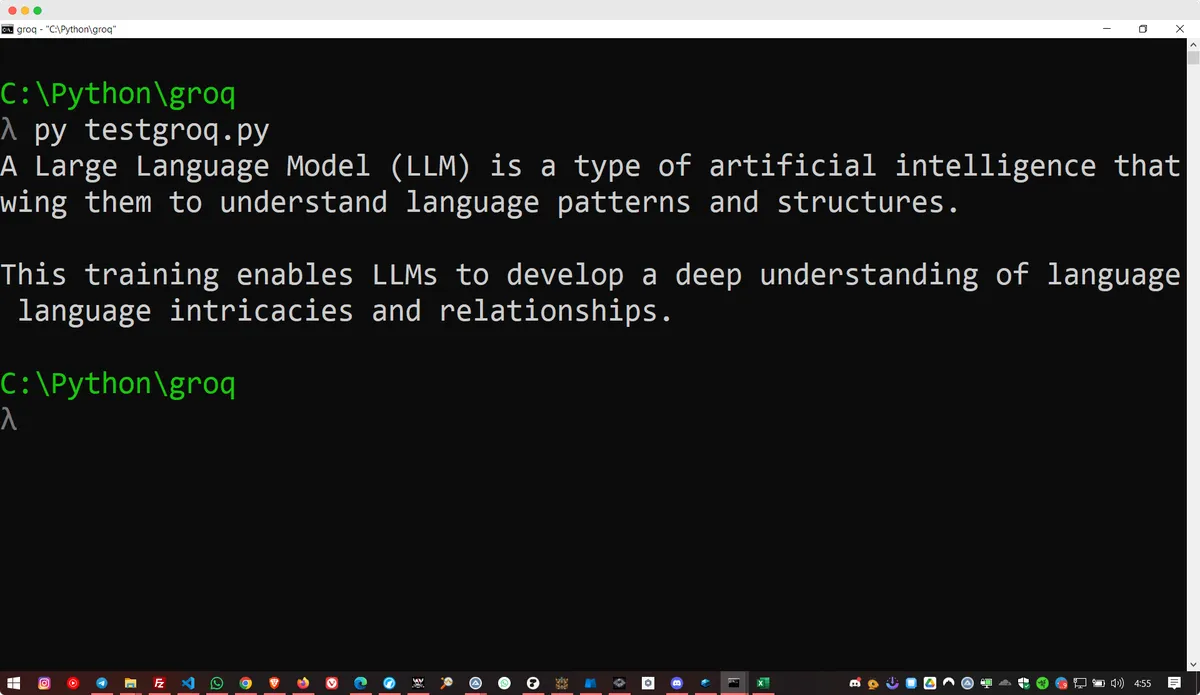

Printing the Summary

The following line will print the summarized text in the terminal as shown above:

```python
print(completion.choices[0].message.content)
```

Finally, this line prints the summary generated by the AI model. It accesses the first choice from the completion response and extracts the content of the summary.
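Besides the text, each choice reports a `finish_reason`, and the response includes a `usage` object with token counts, following OpenAI's response format. A `finish_reason` of `"length"` means generation stopped at `max_tokens`, so the summary may be cut off. The hypothetical helper below returns the text, prints the token usage, and warns in that case.

```python
import warnings
from typing import Any


def extract_summary(completion: Any) -> str:
    """Return the first choice's text, warning if it was truncated."""
    choice = completion.choices[0]
    if choice.finish_reason == "length":
        warnings.warn(
            "The response hit max_tokens and may be incomplete; "
            "consider raising max_tokens.",
            stacklevel=2,
        )
    usage = getattr(completion, "usage", None)
    if usage is not None:
        print(
            f"prompt tokens: {usage.prompt_tokens}, "
            f"completion tokens: {usage.completion_tokens}"
        )
    return choice.message.content
```

You could then write `print(extract_summary(completion))` in place of the plain `print` line.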

Testing the Summarizer

To test the summarizer:

- Replace the API Key: Insert your Groq API key in the `api_key` field.

- Update the Model ID: Make sure the `model` field contains the correct ID for the Llama 3.3 70B model (`llama-3.3-70b-versatile`).

- Run the Script: Execute the script in your Python environment, for instance with `python main.py`.

- Review the Output: Check the printed summary to make sure it meets your expectations.
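Hard-coding the text is fine for a first test, but for longer documents it is often more convenient to pass a file path on the command line. The sketch below shows one way to do that with `argparse`; the argument names and the `--model` default are assumptions, and the API call itself stays as in the script from this guide.

```python
import argparse
from pathlib import Path
from typing import Optional


def parse_args(argv: Optional[list[str]] = None) -> argparse.Namespace:
    """Parse command-line options for a hypothetical summarizer script."""
    parser = argparse.ArgumentParser(description="Summarize a text file with Groq.")
    parser.add_argument("path", type=Path, help="Text file to summarize")
    parser.add_argument("--model", default="llama-3.3-70b-versatile", help="Groq model ID")
    parser.add_argument("--max-tokens", type=int, default=1024, help="Response length limit")
    return parser.parse_args(argv)


def read_input(path: Path) -> str:
    """Read the file as UTF-8 and reject empty input."""
    text = path.read_text(encoding="utf-8").strip()
    if not text:
        raise ValueError(f"{path} is empty")
    return text
```

You would then run something like `python main.py article.txt --max-tokens 512` and pass `read_input(args.path)` as the user message content, `args.model` as `model`, and `args.max_tokens` as `max_tokens`.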

Customizing the Summarizer

You can customize the summarizer by adjusting the parameters or modifying the prompt in the `messages` array:

- Modify the Prompt: Change the `content` of the system message to refine the summarization instructions.

- Adjust Parameters: Tweak `temperature`, `max_tokens`, and `top_p` based on your needs.
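When tweaking these parameters, it can help to keep them in one place and check their ranges before sending a request. The sketch below assumes the ranges of Groq's OpenAI-compatible API (`temperature` between 0 and 2, `top_p` between 0 and 1). The function name and defaults are hypothetical; the lower default temperature reflects the common preference for less randomness in summaries.

```python
def summary_params(
    temperature: float = 0.3,
    max_tokens: int = 1024,
    top_p: float = 1.0,
) -> dict:
    """Return validated keyword arguments for client.chat.completions.create()."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    return {"temperature": temperature, "max_tokens": max_tokens, "top_p": top_p}
```

The result can be unpacked into the request, for example `client.chat.completions.create(model="llama-3.3-70b-versatile", messages=messages, **summary_params(temperature=0.2))`.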

Putting It All Together

Here’s the Python script to summarize a long text with Groq API using Llama 3.3 70B AI model:

```python
from groq import Groq

client = Groq(
    api_key='{Groq API Key here}',  # Put your API key here
)

completion = client.chat.completions.create(
    model="llama-3.3-70b-versatile",  # Groq model ID for Llama 3.3 70B
    messages=[
        # Change your summarization prompt here
        {
            "role": "system",
            "content": "##Task\nYou are an expert in summarizing text. Your task is to extract essential insights and generate a summary of the provided piece of text... Make sure you analyze the content thoroughly and identify key insights, so that you generate a well structured summary covering all the key points and demonstrating a deep understanding of the content.\n\n##Input\n- Text to Summarize: ```{input}```\n- Format: paragraphs\n\n##Output\n- Generate a concise summary with the main ideas presented in 1-2 paragraphs, and continue with other paragraphs.\n- The summary should be coherent and maintain the overall tone and style of the input text.\n- Keep the language accessible, avoiding overly technical or complex phrasing.\n- Do not include any additional explanations, introductions like \"Here is a concise summary of the provided text in paragraphs:\", or concluding statements. Strictly follow this instruction and format."
        },
        # Text to summarize here
        {
            "role": "user",
            "content": "A Large Language Model (LLM) is a type of artificial intelligence (AI) designed to process and generate human-like language. LLMs are trained on massive amounts of text data, which enables them to learn patterns, relationships, and structures of language. This training data can come from various sources, including books, articles, research papers, websites, and social media platforms. By analyzing and processing this vast amount of text data, LLMs can develop a deep understanding of language, including grammar, syntax, semantics, and pragmatics."
        }
    ],
    temperature=1,
    max_tokens=1024,
    top_p=1,
    stream=False,
    stop=None,
)

print(completion.choices[0].message.content)
```

Using the Llama 3.3 70B model through the Groq API provides a robust and very fast solution for many tasks, especially summarizing long texts, as demonstrated above.
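To put a number on that speed, one simple approach is to time a request and divide the number of generated tokens by the elapsed time. The sketch below assumes the response exposes an OpenAI-style `usage.completion_tokens` field; the function names are hypothetical. Because the measured time includes network latency, the result understates the raw generation speed.

```python
import time
from typing import Any, Callable


def tokens_per_second(completion_tokens: int, elapsed_seconds: float) -> float:
    """Throughput in generated tokens per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def timed_completion(create: Callable[..., Any], **kwargs: Any) -> tuple[Any, float]:
    """Call create(**kwargs), e.g. client.chat.completions.create, and
    return the response together with the wall-clock time in seconds."""
    start = time.perf_counter()
    response = create(**kwargs)
    elapsed = time.perf_counter() - start
    return response, elapsed
```

For example, `completion, elapsed = timed_completion(client.chat.completions.create, model="llama-3.3-70b-versatile", messages=messages)` followed by `print(tokens_per_second(completion.usage.completion_tokens, elapsed))`, where `messages` is the list from the script.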

By following the steps outlined in this guide, you can efficiently integrate AI-driven tools, from basic to advanced, into your Python applications.
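To reuse the summarizer inside a larger application, one option is to wrap the script in a function. The sketch below is hypothetical: it takes the client as a parameter (so it can be exercised with a stand-in object), uses a shortened version of the system prompt, and only creates a real `Groq()` client, which reads `GROQ_API_KEY` from the environment, when none is passed in.

```python
from typing import Any, Optional

# Shortened version of this guide's system prompt (adapt as needed).
SYSTEM_PROMPT = (
    "You are an expert in summarizing text. Generate a concise, coherent summary "
    "of the provided text in paragraphs, keeping its tone and style. Do not add "
    "introductions or concluding statements."
)


def summarize(
    text: str,
    client: Optional[Any] = None,
    model: str = "llama-3.3-70b-versatile",
    max_tokens: int = 1024,
) -> str:
    """Summarize text with a chat completion and return the summary string."""
    if client is None:
        from groq import Groq  # imported lazily so tests can run without the SDK

        client = Groq()  # reads GROQ_API_KEY from the environment
    completion = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": text},
        ],
        max_tokens=max_tokens,
    )
    return completion.choices[0].message.content
```

Injecting the client keeps the function easy to test and lets an application share one client across many calls.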

Final Thoughts

To explore the Groq API further, customize the summarizer or build another tool to fit your specific needs, and test it thoroughly to make sure it performs as expected.

With the capabilities and open source AI models offered by Groq, you can handle a wide range of tasks involving large language models with ease.